Kubernetes Blog

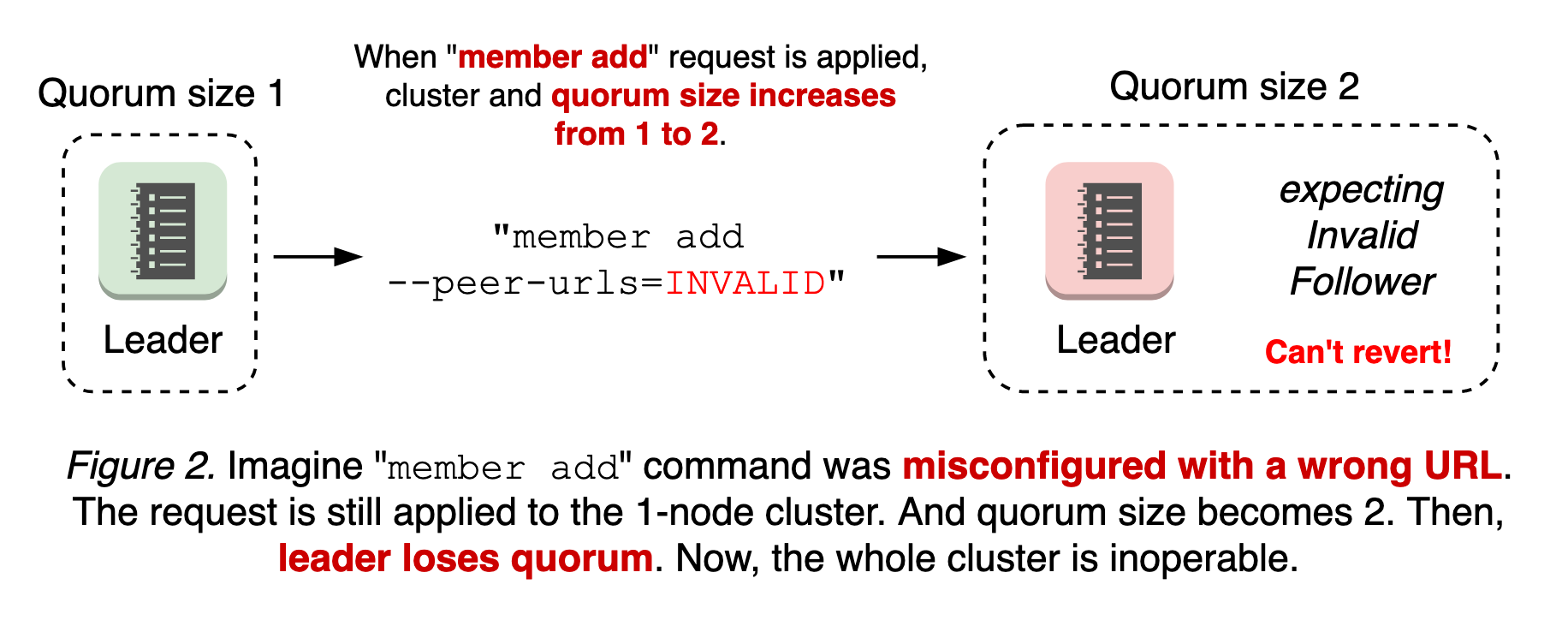

- Kubernetes v1.31: kubeadm v1beta4

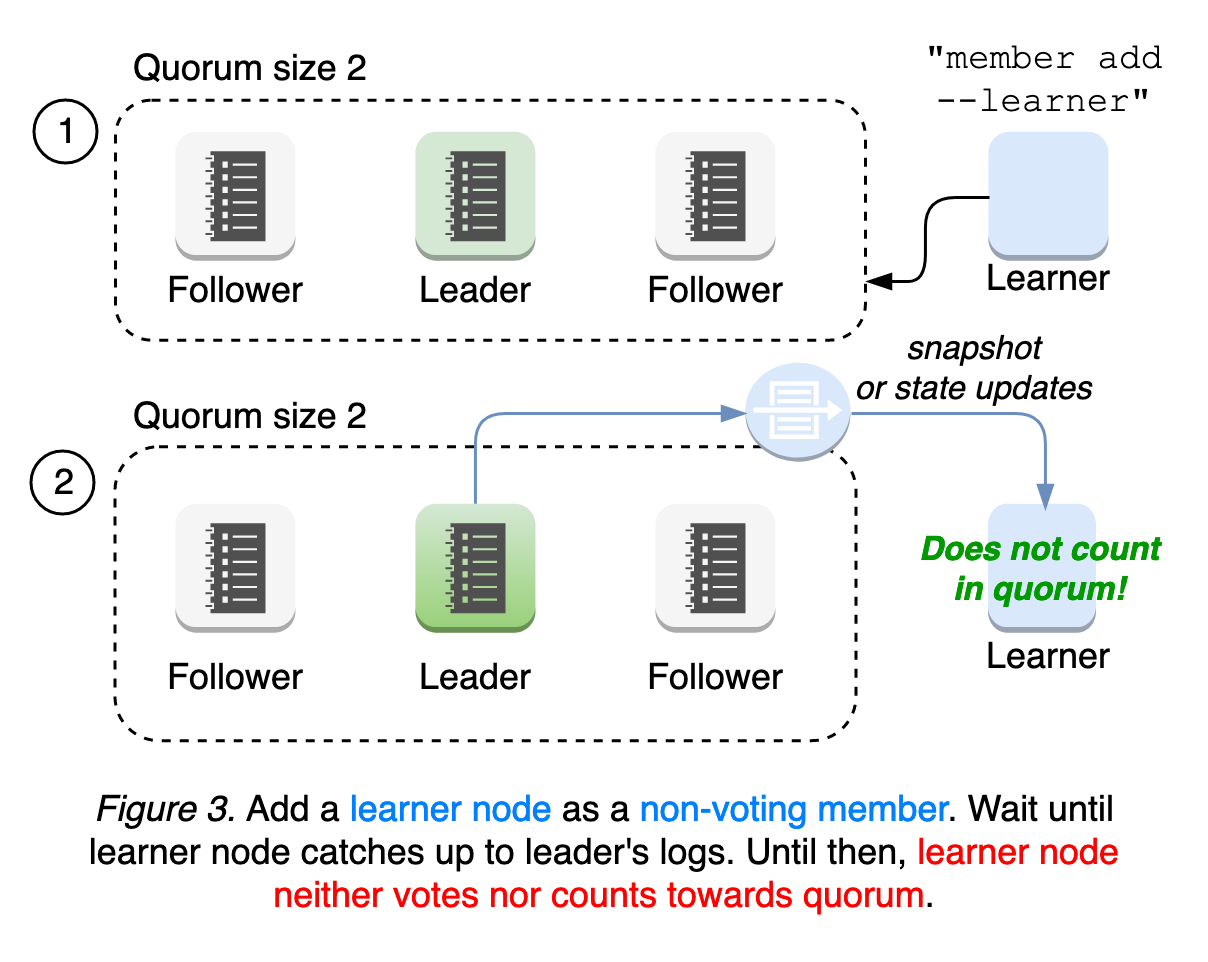

- Kubernetes 1.31: Custom Profiling in Kubectl Debug Graduates to Beta

- Kubernetes 1.31: Fine-grained SupplementalGroups control

- Kubernetes v1.31: New Kubernetes CPUManager Static Policy: Distribute CPUs Across Cores

- Kubernetes 1.31: Autoconfiguration For Node Cgroup Driver (beta)

- Kubernetes 1.31: Streaming Transitions from SPDY to WebSockets

- Kubernetes 1.31: Pod Failure Policy for Jobs Goes GA

- Kubernetes 1.31: MatchLabelKeys in PodAffinity graduates to beta

- Kubernetes 1.31: Prevent PersistentVolume Leaks When Deleting out of Order

- Kubernetes 1.31: Read Only Volumes Based On OCI Artifacts (alpha)

- Kubernetes 1.31: VolumeAttributesClass for Volume Modification Beta

- Kubernetes v1.31: Accelerating Cluster Performance with Consistent Reads from Cache

- Kubernetes 1.31: Moving cgroup v1 Support into Maintenance Mode

- Kubernetes v1.31: PersistentVolume Last Phase Transition Time Moves to GA

- Kubernetes v1.31: Elli

- Introducing Feature Gates to Client-Go: Enhancing Flexibility and Control

- Spotlight on SIG API Machinery

- Kubernetes Removals and Major Changes In v1.31

- Spotlight on SIG Node

- 10 Years of Kubernetes

- Completing the largest migration in Kubernetes history

- Gateway API v1.1: Service mesh, GRPCRoute, and a whole lot more

- Container Runtime Interface streaming explained

- Kubernetes 1.30: Preventing unauthorized volume mode conversion moves to GA

- Kubernetes 1.30: Multi-Webhook and Modular Authorization Made Much Easier

- Kubernetes 1.30: Structured Authentication Configuration Moves to Beta

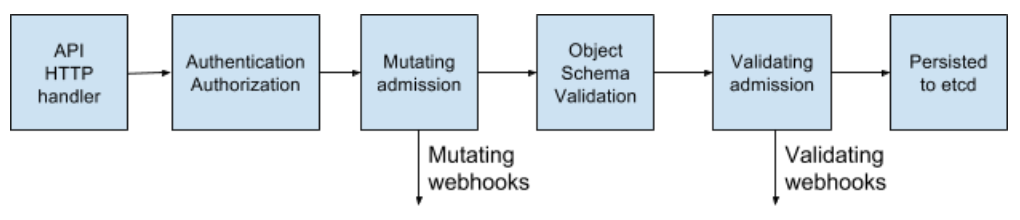

- Kubernetes 1.30: Validating Admission Policy Is Generally Available

- Kubernetes 1.30: Read-only volume mounts can be finally literally read-only

- Kubernetes 1.30: Beta Support For Pods With User Namespaces

- Kubernetes v1.30: Uwubernetes

- Spotlight on SIG Architecture: Code Organization

- DIY: Create Your Own Cloud with Kubernetes (Part 3)

- DIY: Create Your Own Cloud with Kubernetes (Part 2)

- DIY: Create Your Own Cloud with Kubernetes (Part 1)

- Introducing the Windows Operational Readiness Specification

- A Peek at Kubernetes v1.30

- CRI-O: Applying seccomp profiles from OCI registries

- Spotlight on SIG Cloud Provider

- A look into the Kubernetes Book Club

- Image Filesystem: Configuring Kubernetes to store containers on a separate filesystem

- Spotlight on SIG Release (Release Team Subproject)

- Contextual logging in Kubernetes 1.29: Better troubleshooting and enhanced logging

- Kubernetes 1.29: Decoupling taint-manager from node-lifecycle-controller

- Kubernetes 1.29: PodReadyToStartContainers Condition Moves to Beta

- Kubernetes 1.29: New (alpha) Feature, Load Balancer IP Mode for Services

- Kubernetes 1.29: Single Pod Access Mode for PersistentVolumes Graduates to Stable

- Kubernetes 1.29: CSI Storage Resizing Authenticated and Generally Available in v1.29

- Kubernetes 1.29: VolumeAttributesClass for Volume Modification

- Kubernetes 1.29: Cloud Provider Integrations Are Now Separate Components

- Kubernetes v1.29: Mandala

- New Experimental Features in Gateway API v1.0

- Spotlight on SIG Testing

- Kubernetes Removals, Deprecations, and Major Changes in Kubernetes 1.29

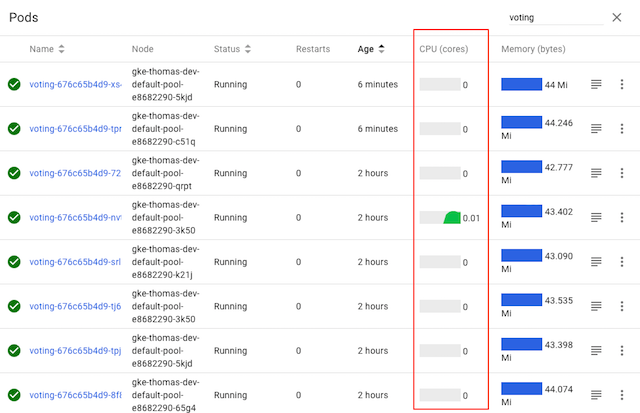

- The Case for Kubernetes Resource Limits: Predictability vs. Efficiency

- Introducing SIG etcd

- Kubernetes Contributor Summit: Behind-the-scenes

- Spotlight on SIG Architecture: Production Readiness

- Gateway API v1.0: GA Release

- Introducing ingress2gateway; Simplifying Upgrades to Gateway API

- Plants, process and parties: the Kubernetes 1.28 release interview

- PersistentVolume Last Phase Transition Time in Kubernetes

- A Quick Recap of 2023 China Kubernetes Contributor Summit

- Bootstrap an Air Gapped Cluster With Kubeadm

- CRI-O is moving towards pkgs.k8s.io

- Spotlight on SIG Architecture: Conformance

- Announcing the 2023 Steering Committee Election Results

- Happy 7th Birthday kubeadm!

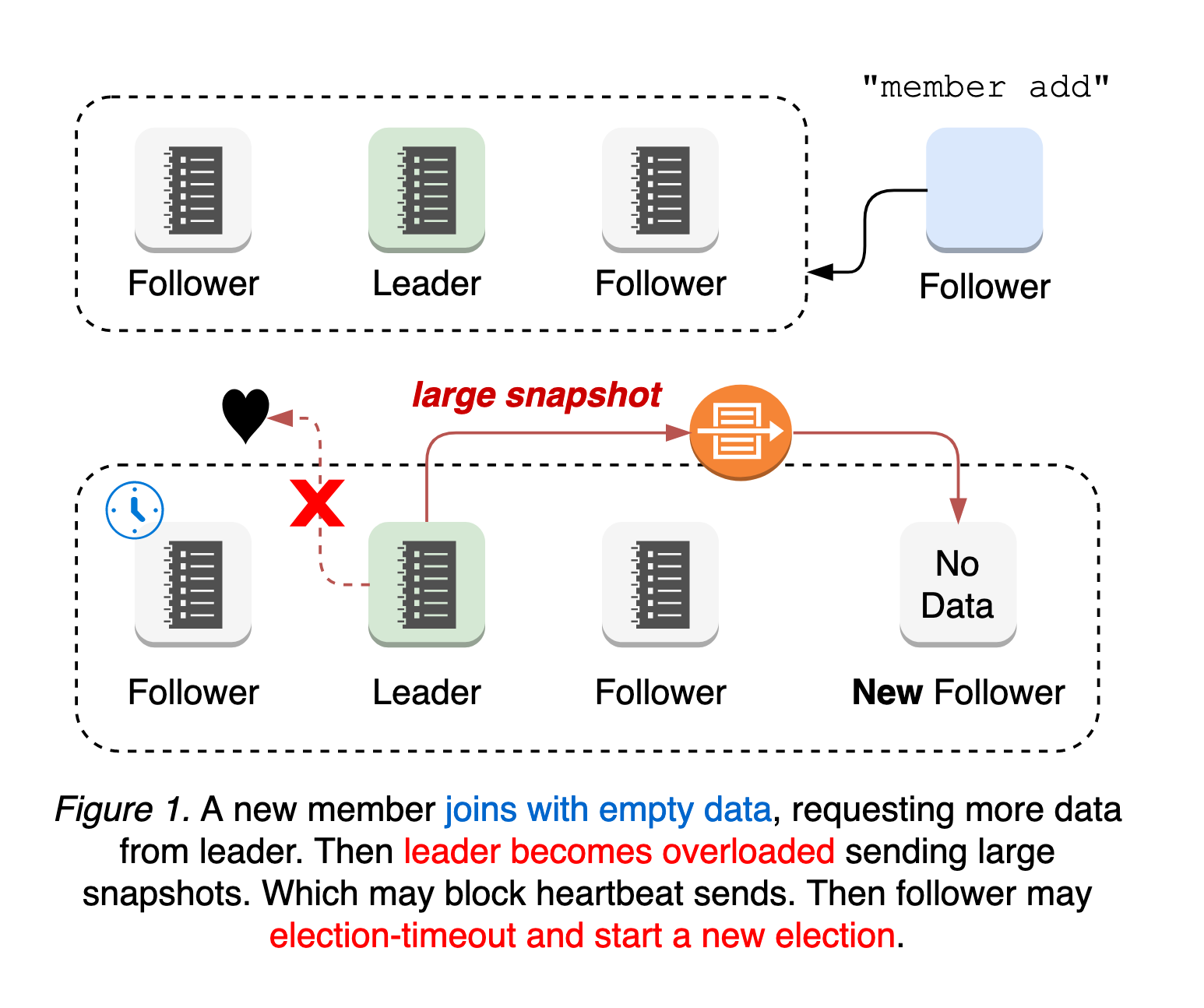

- kubeadm: Use etcd Learner to Join a Control Plane Node Safely

- User Namespaces: Now Supports Running Stateful Pods in Alpha!

- Comparing Local Kubernetes Development Tools: Telepresence, Gefyra, and mirrord

- Kubernetes Legacy Package Repositories Will Be Frozen On September 13, 2023

- Gateway API v0.8.0: Introducing Service Mesh Support

- Kubernetes 1.28: A New (alpha) Mechanism For Safer Cluster Upgrades

- Kubernetes v1.28: Introducing native sidecar containers

- Kubernetes 1.28: Beta support for using swap on Linux

- Kubernetes 1.28: Node podresources API Graduates to GA

- Kubernetes 1.28: Improved failure handling for Jobs

- Kubernetes v1.28: Retroactive Default StorageClass move to GA

- Kubernetes 1.28: Non-Graceful Node Shutdown Moves to GA

- pkgs.k8s.io: Introducing Kubernetes Community-Owned Package Repositories

- Kubernetes v1.28: Planternetes

- Spotlight on SIG ContribEx

- Spotlight on SIG CLI

- Confidential Kubernetes: Use Confidential Virtual Machines and Enclaves to improve your cluster security

- Verifying Container Image Signatures Within CRI Runtimes

- dl.k8s.io to adopt a Content Delivery Network

- Using OCI artifacts to distribute security profiles for seccomp, SELinux and AppArmor

- Having fun with seccomp profiles on the edge

- Kubernetes 1.27: KMS V2 Moves to Beta

- Kubernetes 1.27: updates on speeding up Pod startup

- Kubernetes 1.27: In-place Resource Resize for Kubernetes Pods (alpha)

- Kubernetes 1.27: Avoid Collisions Assigning Ports to NodePort Services

- Kubernetes 1.27: Safer, More Performant Pruning in kubectl apply

- Kubernetes 1.27: Introducing An API For Volume Group Snapshots

- Kubernetes 1.27: Quality-of-Service for Memory Resources (alpha)

- Kubernetes 1.27: StatefulSet PVC Auto-Deletion (beta)

- Kubernetes 1.27: HorizontalPodAutoscaler ContainerResource type metric moves to beta

- Kubernetes 1.27: StatefulSet Start Ordinal Simplifies Migration

- Updates to the Auto-refreshing Official CVE Feed

- Kubernetes 1.27: Server Side Field Validation and OpenAPI V3 move to GA

- Kubernetes 1.27: Query Node Logs Using The Kubelet API

- Kubernetes 1.27: Single Pod Access Mode for PersistentVolumes Graduates to Beta

- Kubernetes 1.27: Efficient SELinux volume relabeling (Beta)

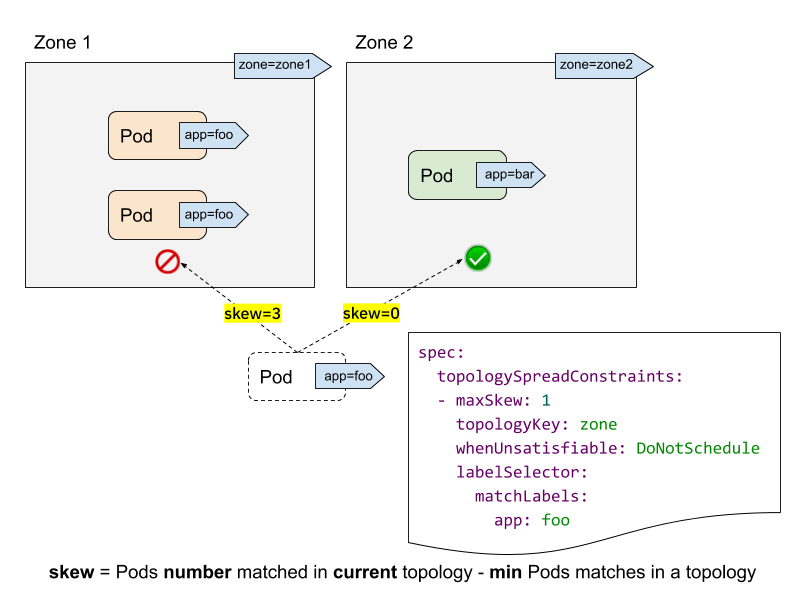

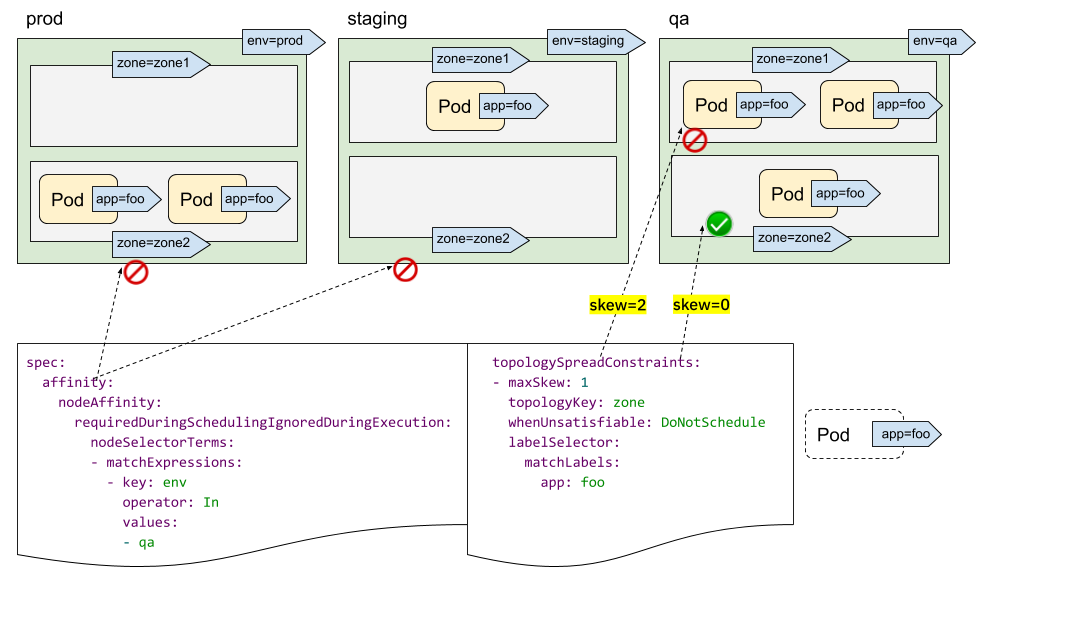

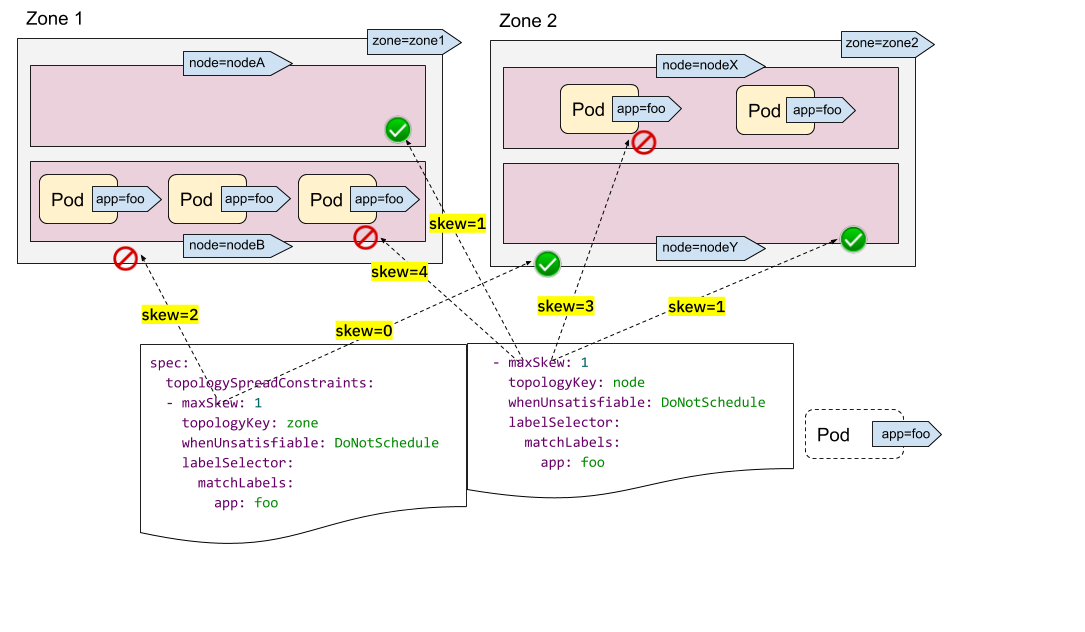

- Kubernetes 1.27: More fine-grained pod topology spread policies reached beta

- Kubernetes v1.27: Chill Vibes

- Keeping Kubernetes Secure with Updated Go Versions

- Kubernetes Validating Admission Policies: A Practical Example

- Kubernetes Removals and Major Changes In v1.27

- k8s.gcr.io Redirect to registry.k8s.io - What You Need to Know

- Forensic container analysis

- Introducing KWOK: Kubernetes WithOut Kubelet

- Free Katacoda Kubernetes Tutorials Are Shutting Down

- k8s.gcr.io Image Registry Will Be Frozen From the 3rd of April 2023

- Spotlight on SIG Instrumentation

- Consider All Microservices Vulnerable — And Monitor Their Behavior

- Protect Your Mission-Critical Pods From Eviction With PriorityClass

- Kubernetes 1.26: Eviction policy for unhealthy pods guarded by PodDisruptionBudgets

- Kubernetes v1.26: Retroactive Default StorageClass

- Kubernetes v1.26: Alpha support for cross-namespace storage data sources

- Kubernetes v1.26: Advancements in Kubernetes Traffic Engineering

- Kubernetes 1.26: Job Tracking, to Support Massively Parallel Batch Workloads, Is Generally Available

- Kubernetes v1.26: CPUManager goes GA

- Kubernetes 1.26: Pod Scheduling Readiness

- Kubernetes 1.26: Support for Passing Pod fsGroup to CSI Drivers At Mount Time

- Kubernetes v1.26: GA Support for Kubelet Credential Providers

- Kubernetes 1.26: Introducing Validating Admission Policies

- Kubernetes 1.26: Device Manager graduates to GA

- Kubernetes 1.26: Non-Graceful Node Shutdown Moves to Beta

- Kubernetes 1.26: Alpha API For Dynamic Resource Allocation

- Kubernetes 1.26: Windows HostProcess Containers Are Generally Available

- Kubernetes 1.26: We're now signing our binary release artifacts!

- Kubernetes v1.26: Electrifying

- Forensic container checkpointing in Kubernetes

- Finding suspicious syscalls with the seccomp notifier

- Boosting Kubernetes container runtime observability with OpenTelemetry

- registry.k8s.io: faster, cheaper and Generally Available (GA)

- Kubernetes Removals, Deprecations, and Major Changes in 1.26

- Live and let live with Kluctl and Server Side Apply

- Server Side Apply Is Great And You Should Be Using It

- Current State: 2019 Third Party Security Audit of Kubernetes

- Introducing Kueue

- Kubernetes 1.25: alpha support for running Pods with user namespaces

- Enforce CRD Immutability with CEL Transition Rules

- Kubernetes 1.25: Kubernetes In-Tree to CSI Volume Migration Status Update

- Kubernetes 1.25: CustomResourceDefinition Validation Rules Graduate to Beta

- Kubernetes 1.25: Use Secrets for Node-Driven Expansion of CSI Volumes

- Kubernetes 1.25: Local Storage Capacity Isolation Reaches GA

- Kubernetes 1.25: Two Features for Apps Rollouts Graduate to Stable

- Kubernetes 1.25: PodHasNetwork Condition for Pods

- Announcing the Auto-refreshing Official Kubernetes CVE Feed

- Kubernetes 1.25: KMS V2 Improvements

- Kubernetes’s IPTables Chains Are Not API

- Introducing COSI: Object Storage Management using Kubernetes APIs

- Kubernetes 1.25: cgroup v2 graduates to GA

- Kubernetes 1.25: CSI Inline Volumes have graduated to GA

- Kubernetes v1.25: Pod Security Admission Controller in Stable

- PodSecurityPolicy: The Historical Context

- Kubernetes v1.25: Combiner

- Spotlight on SIG Storage

- Stargazing, solutions and staycations: the Kubernetes 1.24 release interview

- Meet Our Contributors - APAC (China region)

- Enhancing Kubernetes one KEP at a Time

- Kubernetes Removals and Major Changes In 1.25

- Spotlight on SIG Docs

- Kubernetes Gateway API Graduates to Beta

- Annual Report Summary 2021

- Kubernetes 1.24: Maximum Unavailable Replicas for StatefulSet

- Contextual Logging in Kubernetes 1.24

- Kubernetes 1.24: Avoid Collisions Assigning IP Addresses to Services

- Kubernetes 1.24: Introducing Non-Graceful Node Shutdown Alpha

- Kubernetes 1.24: Prevent unauthorised volume mode conversion

- Kubernetes 1.24: Volume Populators Graduate to Beta

- Kubernetes 1.24: gRPC container probes in beta

- Kubernetes 1.24: Storage Capacity Tracking Now Generally Available

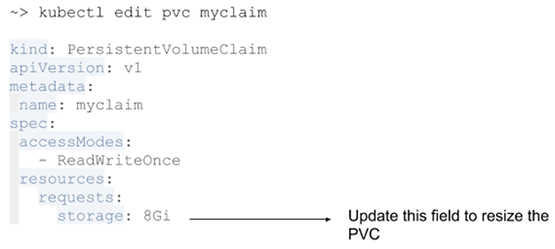

- Kubernetes 1.24: Volume Expansion Now A Stable Feature

- Dockershim: The Historical Context

- Kubernetes 1.24: Stargazer

- Frontiers, fsGroups and frogs: the Kubernetes 1.23 release interview

- Increasing the security bar in Ingress-NGINX v1.2.0

- Kubernetes Removals and Deprecations In 1.24

- Is Your Cluster Ready for v1.24?

- Meet Our Contributors - APAC (Aus-NZ region)

- Updated: Dockershim Removal FAQ

- SIG Node CI Subproject Celebrates Two Years of Test Improvements

- Spotlight on SIG Multicluster

- Securing Admission Controllers

- Meet Our Contributors - APAC (India region)

- Kubernetes is Moving on From Dockershim: Commitments and Next Steps

- Kubernetes-in-Kubernetes and the WEDOS PXE bootable server farm

- Using Admission Controllers to Detect Container Drift at Runtime

- What's new in Security Profiles Operator v0.4.0

- Kubernetes 1.23: StatefulSet PVC Auto-Deletion (alpha)

- Kubernetes 1.23: Prevent PersistentVolume leaks when deleting out of order

- Kubernetes 1.23: Kubernetes In-Tree to CSI Volume Migration Status Update

- Kubernetes 1.23: Pod Security Graduates to Beta

- Kubernetes 1.23: Dual-stack IPv4/IPv6 Networking Reaches GA

- Kubernetes 1.23: The Next Frontier

- Contribution, containers and cricket: the Kubernetes 1.22 release interview

- Quality-of-Service for Memory Resources

- Dockershim removal is coming. Are you ready?

- Non-root Containers And Devices

- Announcing the 2021 Steering Committee Election Results

- Use KPNG to Write Specialized kube-proxiers

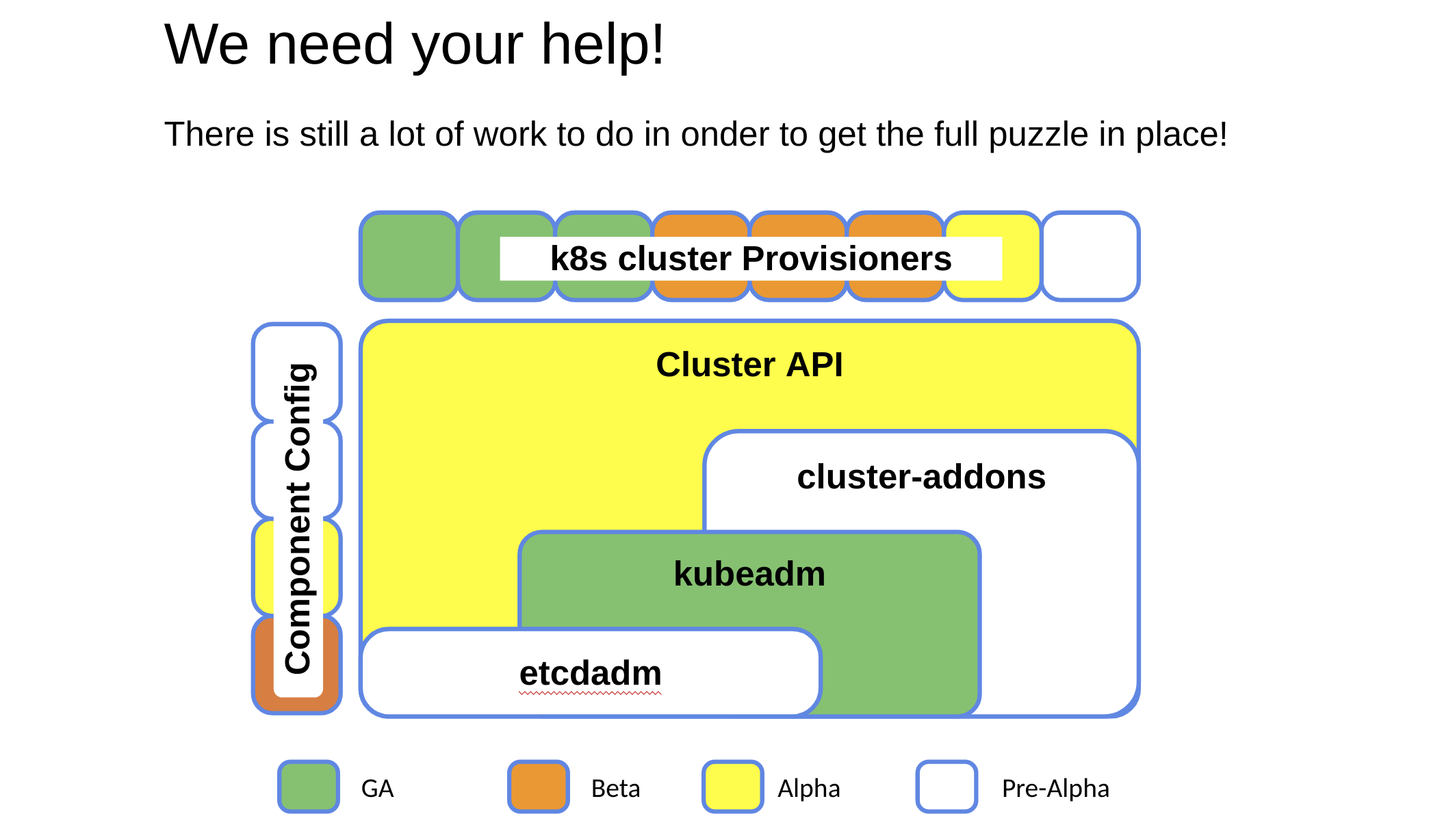

- Introducing ClusterClass and Managed Topologies in Cluster API

- A Closer Look at NSA/CISA Kubernetes Hardening Guidance

- How to Handle Data Duplication in Data-Heavy Kubernetes Environments

- Spotlight on SIG Node

- Introducing Single Pod Access Mode for PersistentVolumes

- Alpha in Kubernetes v1.22: API Server Tracing

- Kubernetes 1.22: A New Design for Volume Populators

- Minimum Ready Seconds for StatefulSets

- Enable seccomp for all workloads with a new v1.22 alpha feature

- Alpha in v1.22: Windows HostProcess Containers

- Kubernetes Memory Manager moves to beta

- Kubernetes 1.22: CSI Windows Support (with CSI Proxy) reaches GA

- New in Kubernetes v1.22: alpha support for using swap memory

- Kubernetes 1.22: Server Side Apply moves to GA

- Kubernetes 1.22: Reaching New Peaks

- Roorkee robots, releases and racing: the Kubernetes 1.21 release interview

- Updating NGINX-Ingress to use the stable Ingress API

- Kubernetes Release Cadence Change: Here’s What You Need To Know

- Spotlight on SIG Usability

- Kubernetes API and Feature Removals In 1.22: Here’s What You Need To Know

- Announcing Kubernetes Community Group Annual Reports

- Writing a Controller for Pod Labels

- Using Finalizers to Control Deletion

- Kubernetes 1.21: Metrics Stability hits GA

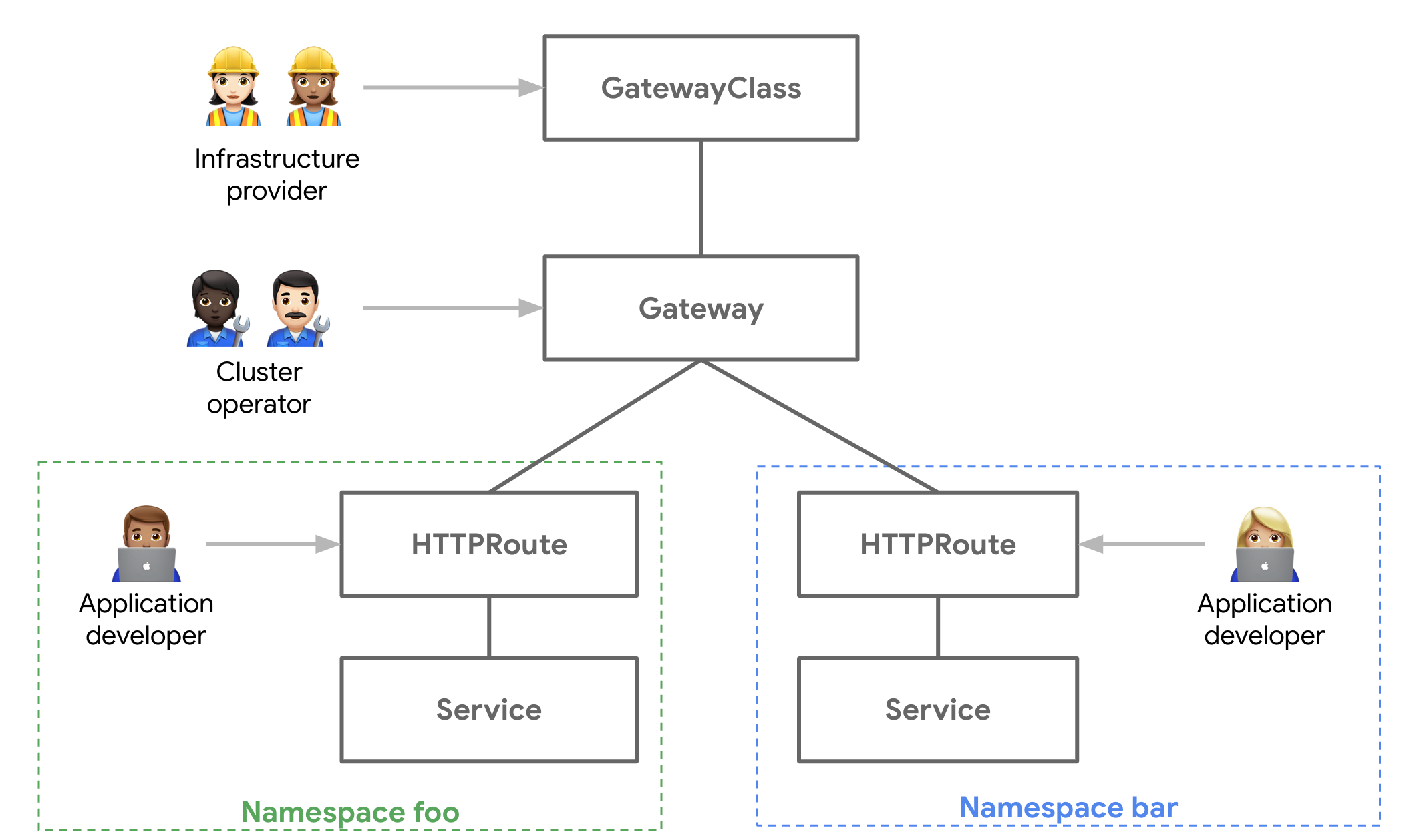

- Evolving Kubernetes networking with the Gateway API

- Graceful Node Shutdown Goes Beta

- Annotating Kubernetes Services for Humans

- Defining Network Policy Conformance for Container Network Interface (CNI) providers

- Introducing Indexed Jobs

- Volume Health Monitoring Alpha Update

- Three Tenancy Models For Kubernetes

- Local Storage: Storage Capacity Tracking, Distributed Provisioning and Generic Ephemeral Volumes hit Beta

- kube-state-metrics goes v2.0

- Introducing Suspended Jobs

- Kubernetes 1.21: CronJob Reaches GA

- Kubernetes 1.21: Power to the Community

- PodSecurityPolicy Deprecation: Past, Present, and Future

- The Evolution of Kubernetes Dashboard

- A Custom Kubernetes Scheduler to Orchestrate Highly Available Applications

- Kubernetes 1.20: Pod Impersonation and Short-lived Volumes in CSI Drivers

- Third Party Device Metrics Reaches GA

- Kubernetes 1.20: Granular Control of Volume Permission Changes

- Kubernetes 1.20: Kubernetes Volume Snapshot Moves to GA

- Kubernetes 1.20: The Raddest Release

- GSoD 2020: Improving the API Reference Experience

- Dockershim Deprecation FAQ

- Don't Panic: Kubernetes and Docker

- Cloud native security for your clusters

- Remembering Dan Kohn

- Announcing the 2020 Steering Committee Election Results

- Contributing to the Development Guide

- GSoC 2020 - Building operators for cluster addons

- Introducing Structured Logs

- Warning: Helpful Warnings Ahead

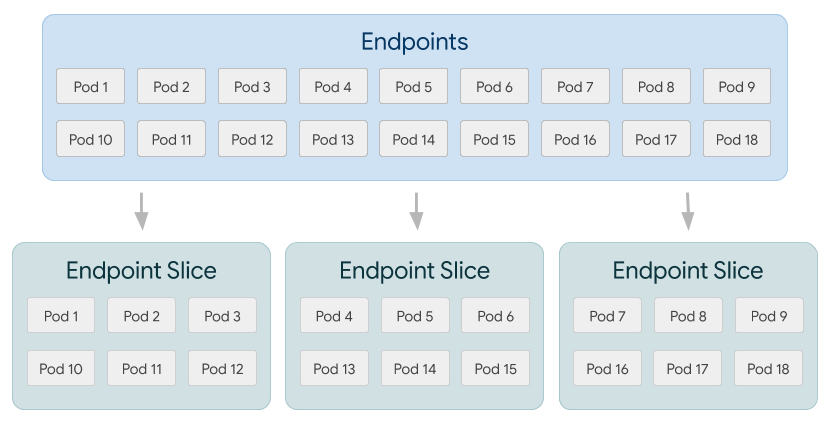

- Scaling Kubernetes Networking With EndpointSlices

- Ephemeral volumes with storage capacity tracking: EmptyDir on steroids

- Increasing the Kubernetes Support Window to One Year

- Kubernetes 1.19: Accentuate the Paw-sitive

- Moving Forward From Beta

- Introducing Hierarchical Namespaces

- Physics, politics and Pull Requests: the Kubernetes 1.18 release interview

- Music and math: the Kubernetes 1.17 release interview

- SIG-Windows Spotlight

- Working with Terraform and Kubernetes

- A Better Docs UX With Docsy

- Supporting the Evolving Ingress Specification in Kubernetes 1.18

- K8s KPIs with Kuberhealthy

- My exciting journey into Kubernetes’ history

- An Introduction to the K8s-Infrastructure Working Group

- WSL+Docker: Kubernetes on the Windows Desktop

- How Docs Handle Third Party and Dual Sourced Content

- Introducing PodTopologySpread

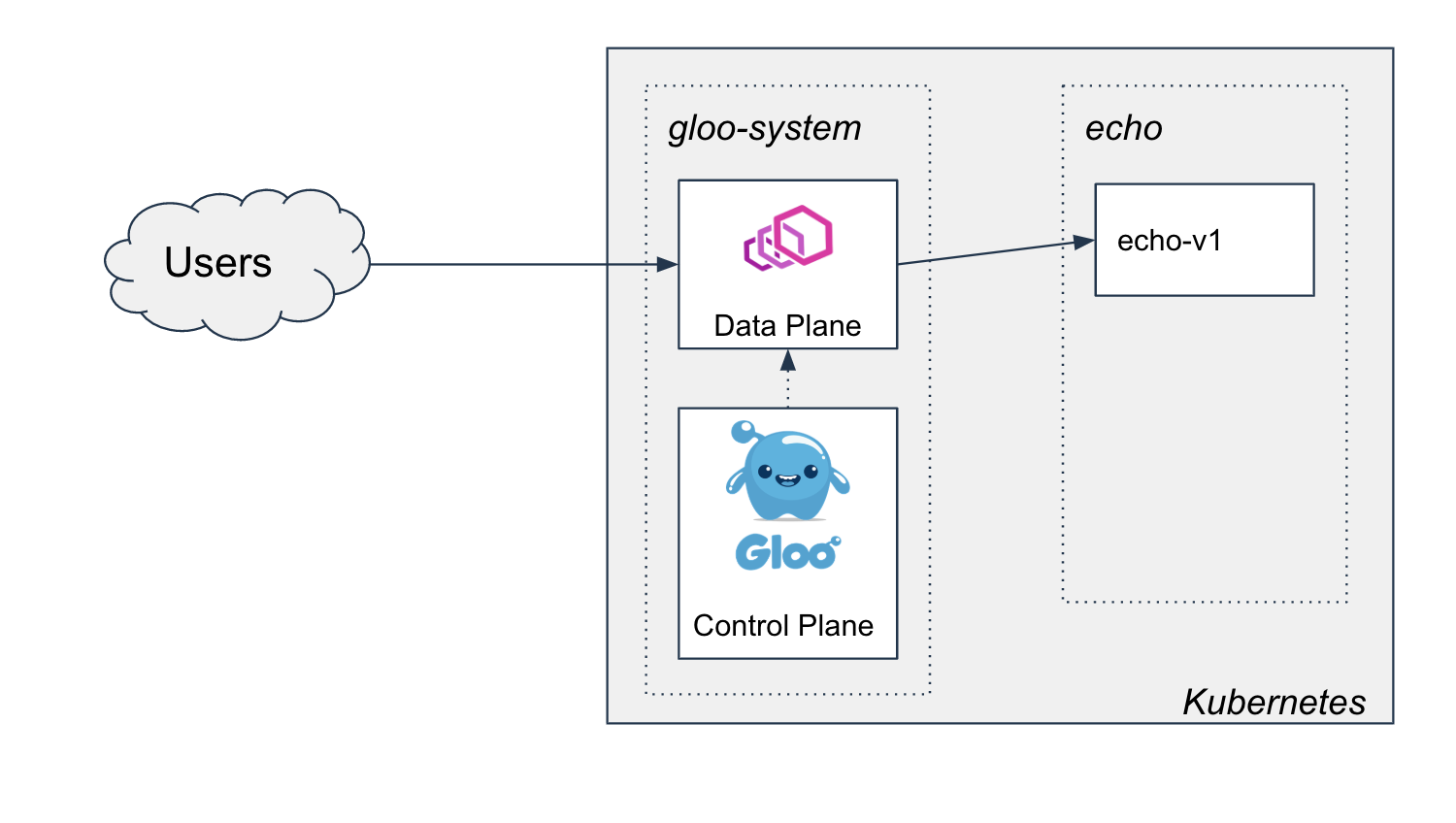

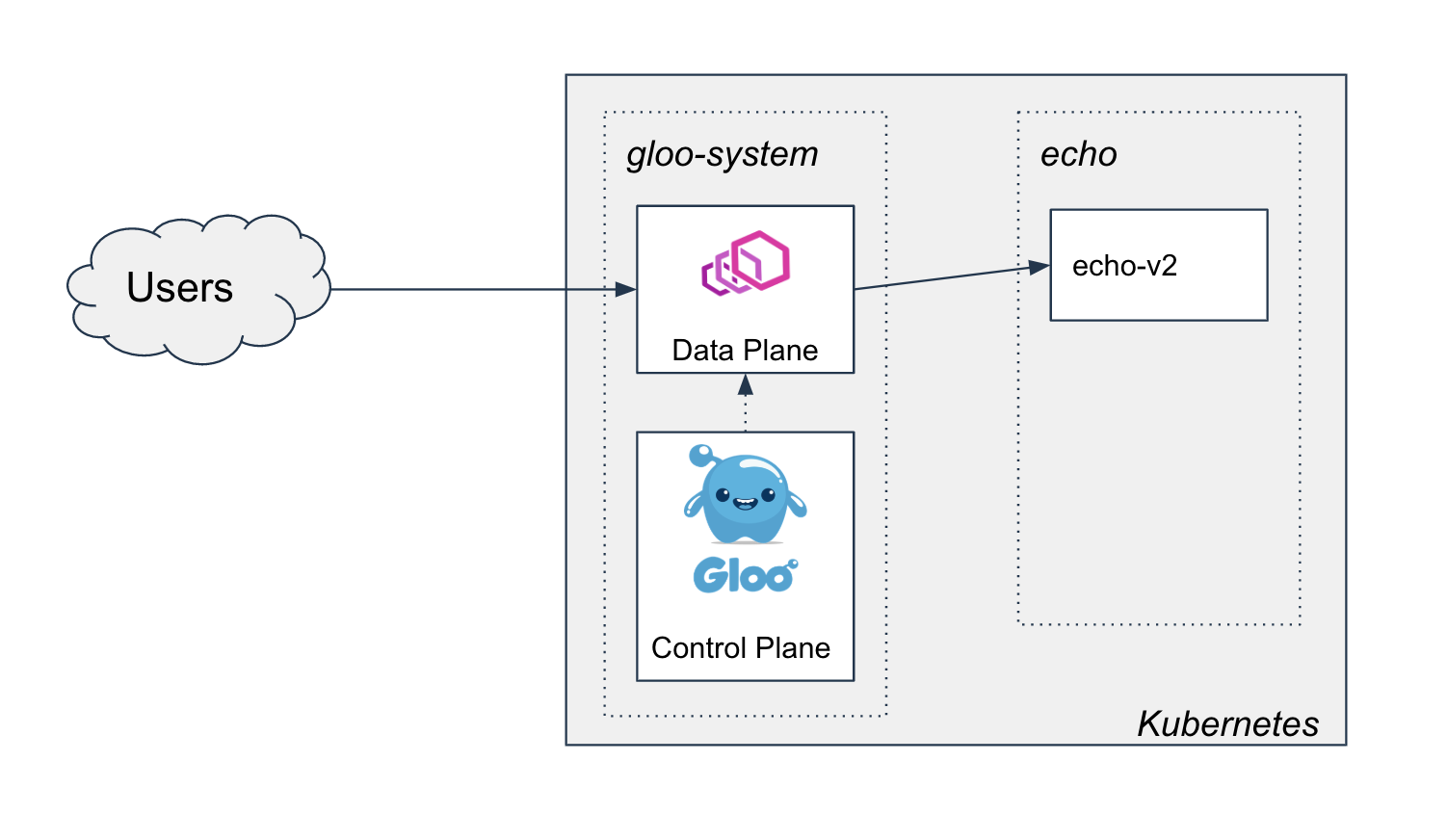

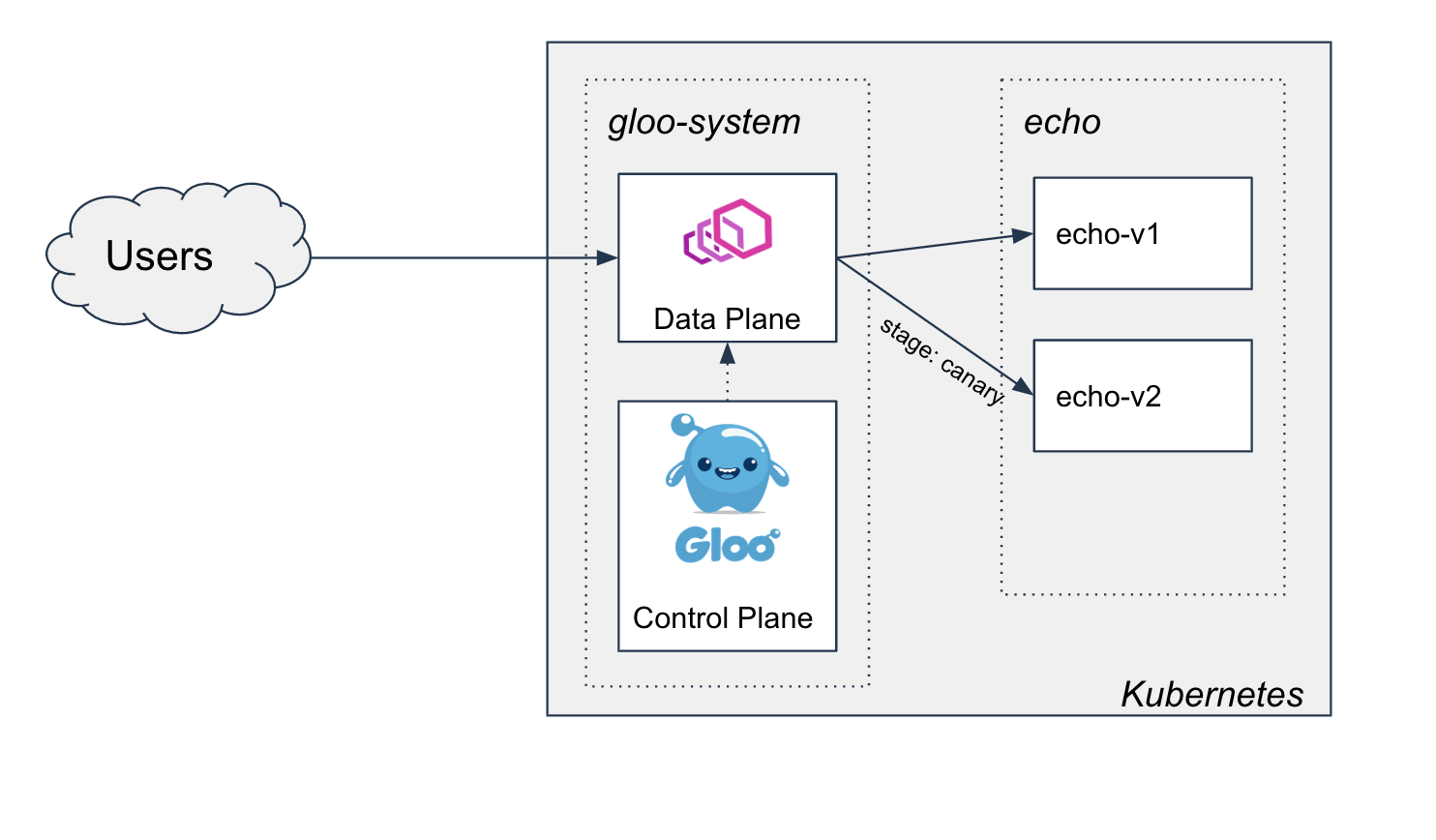

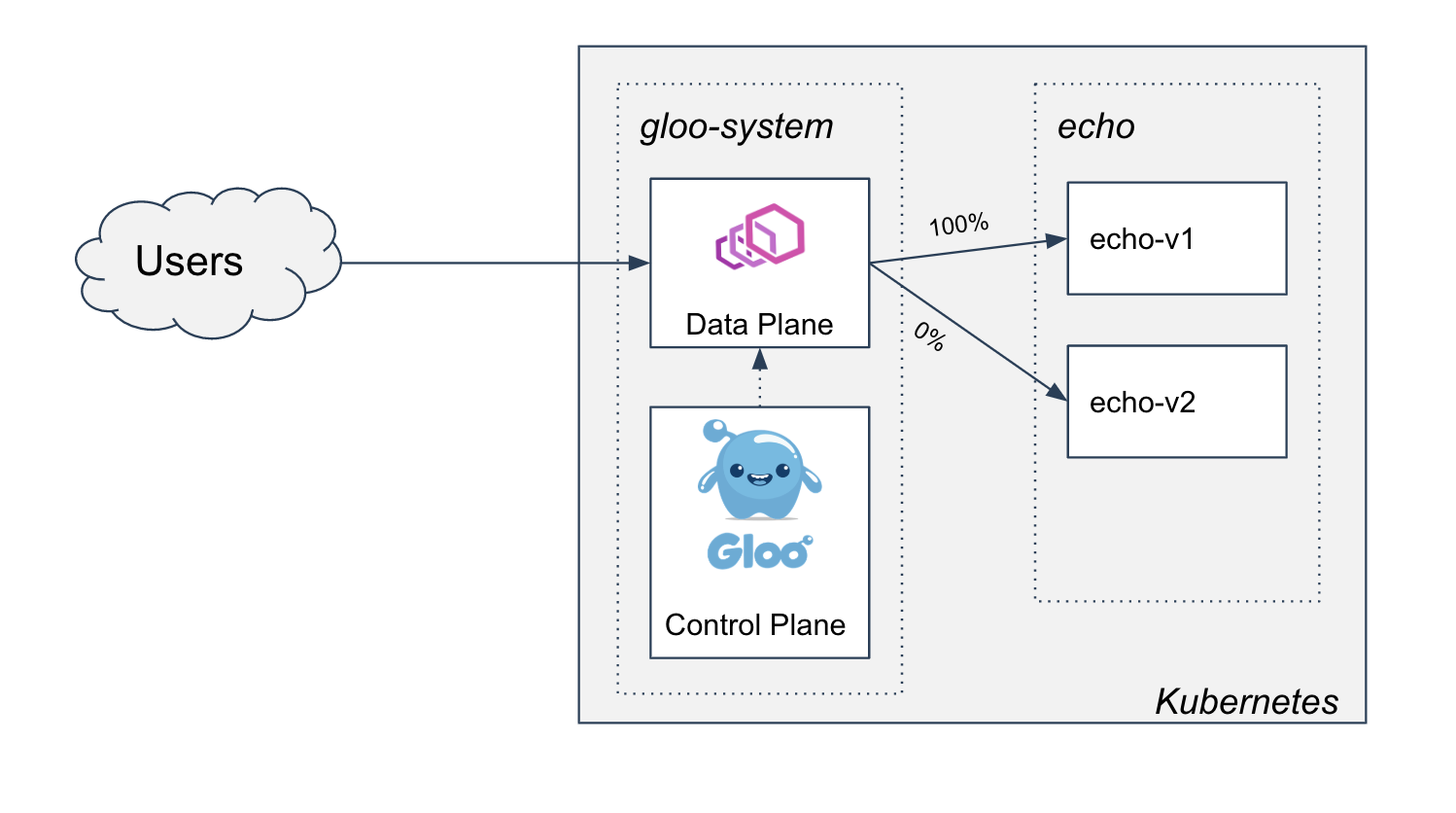

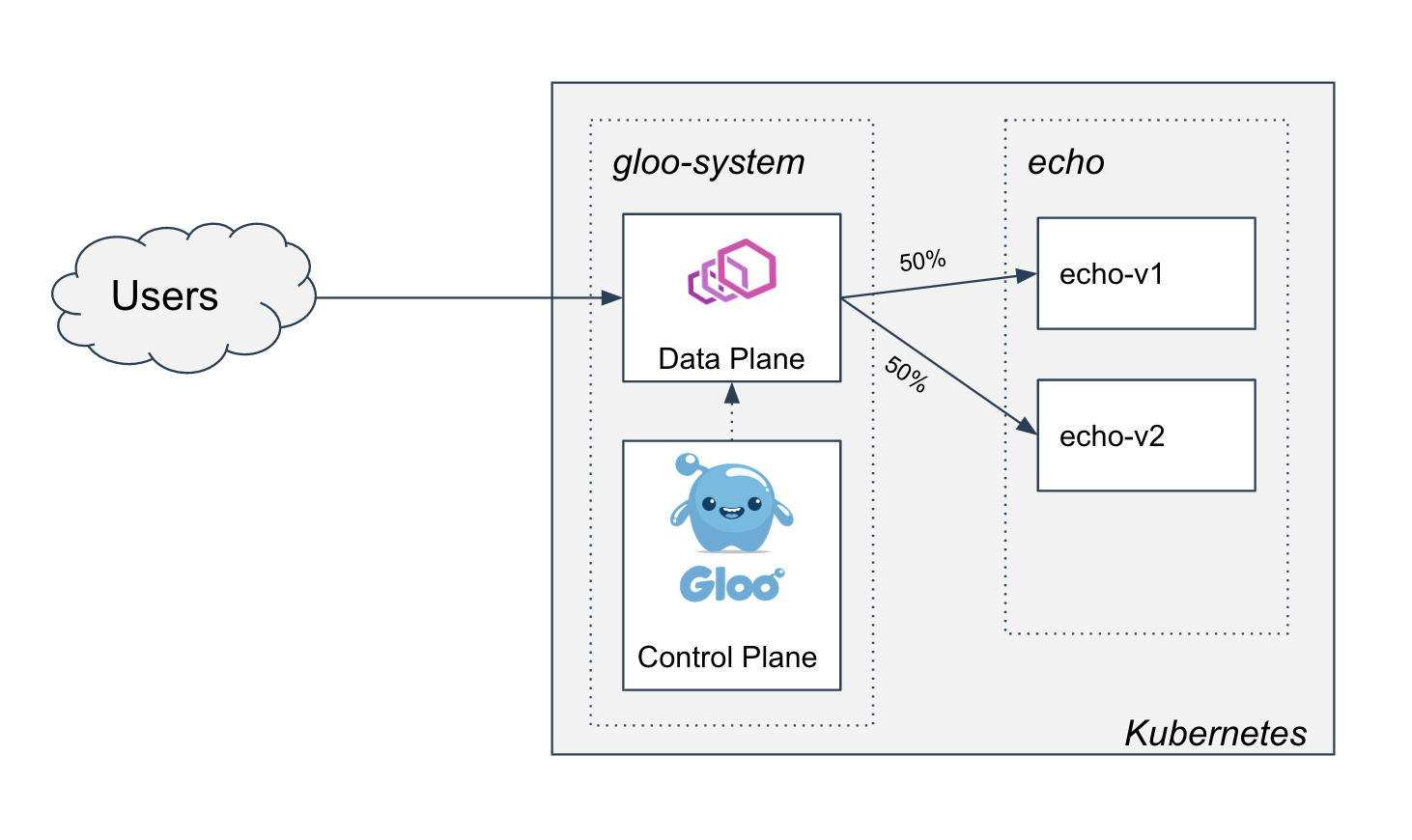

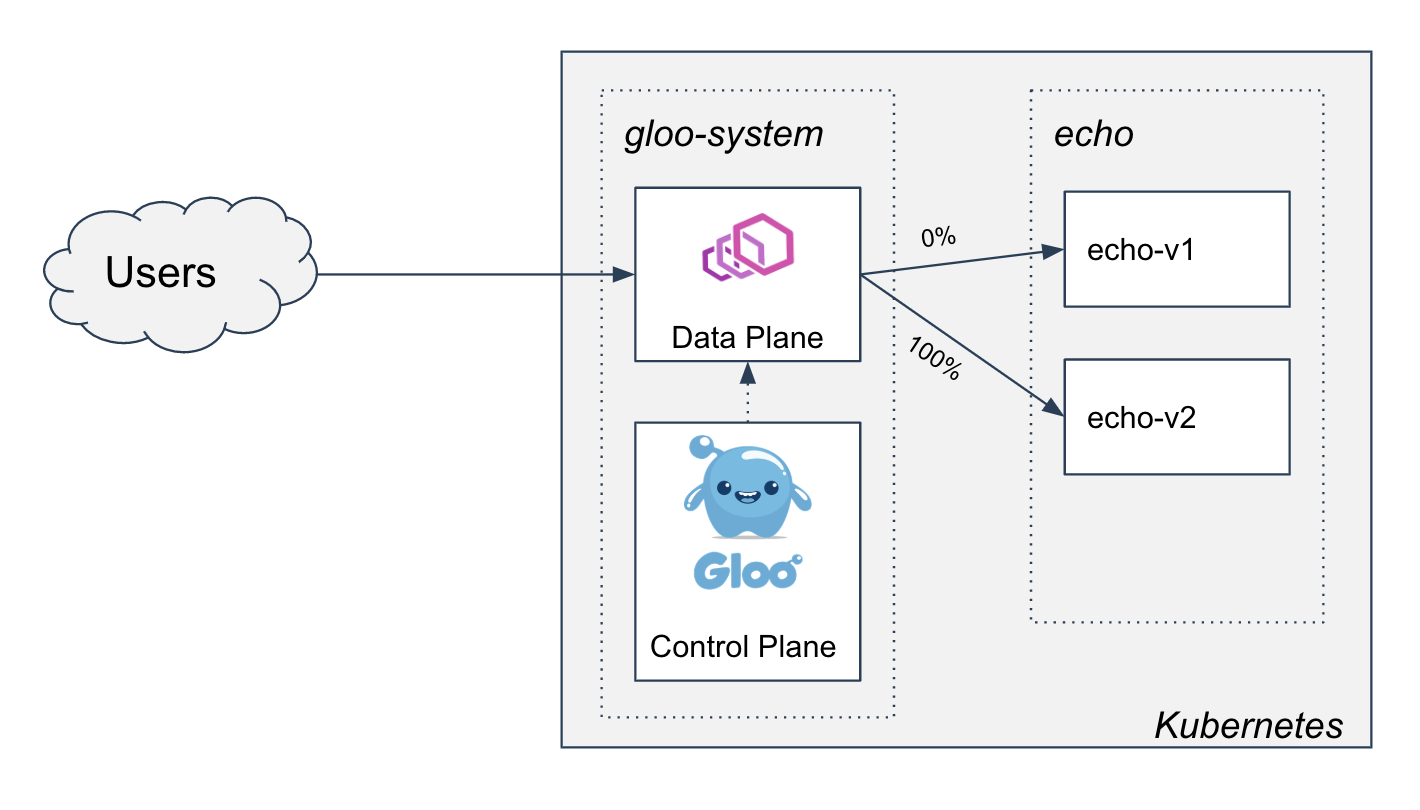

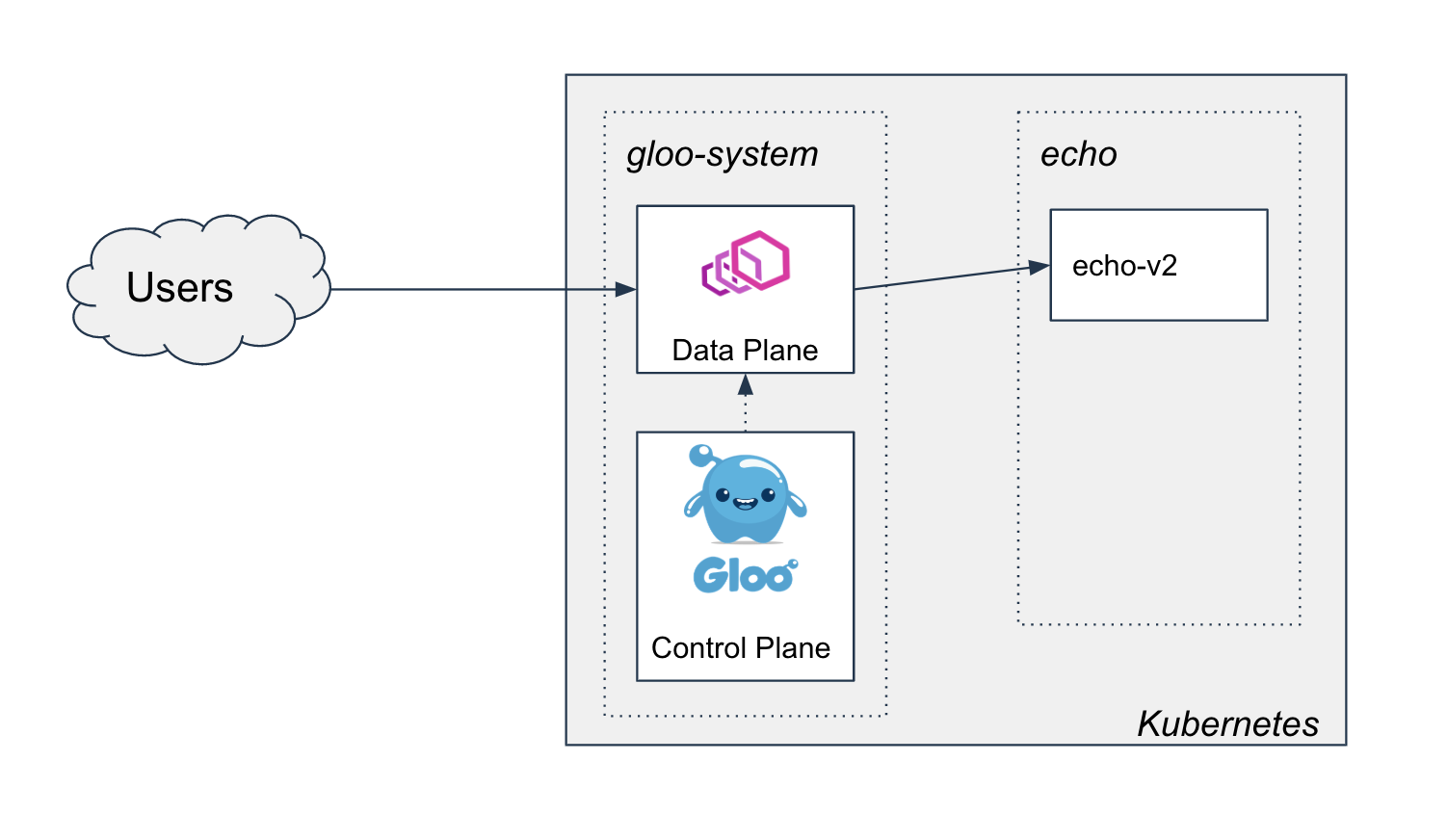

- Two-phased Canary Rollout with Open Source Gloo

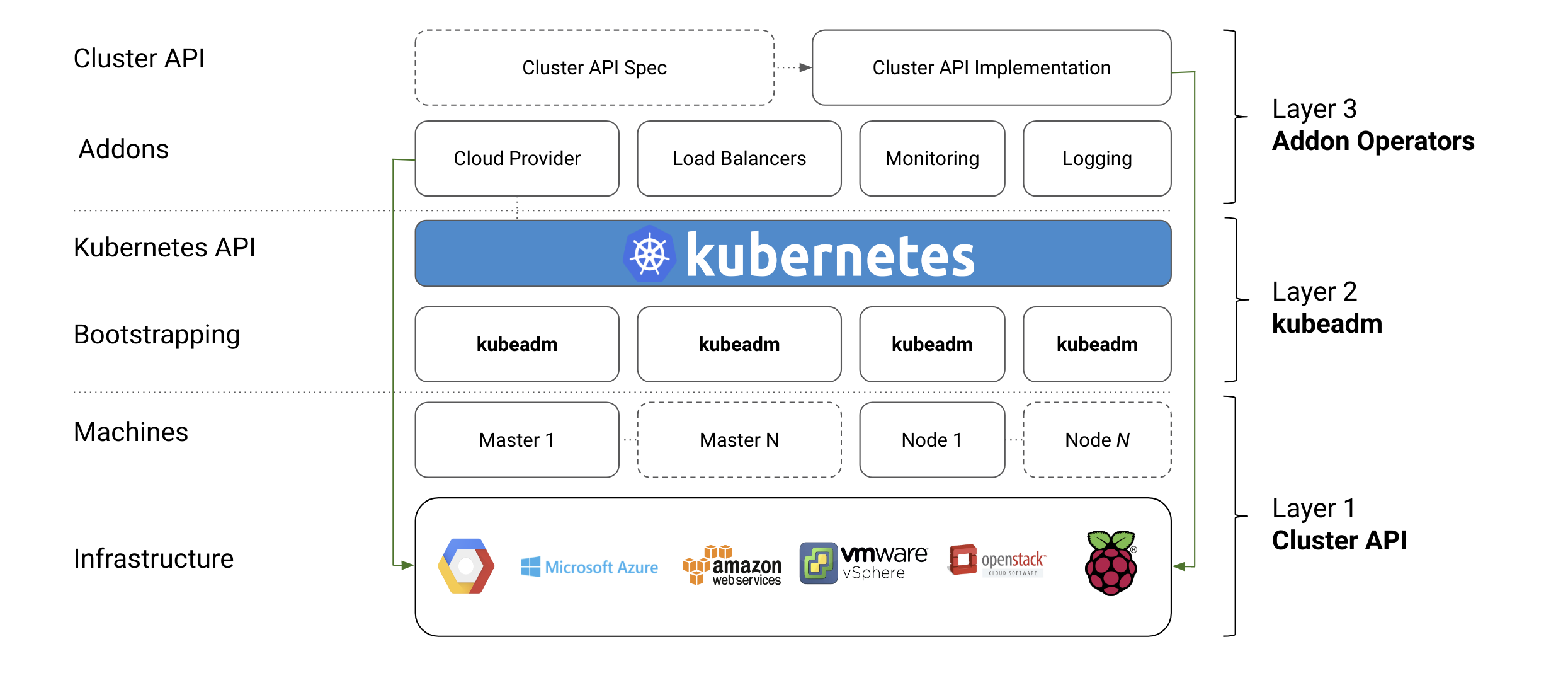

- Cluster API v1alpha3 Delivers New Features and an Improved User Experience

- How Kubernetes contributors are building a better communication process

- API Priority and Fairness Alpha

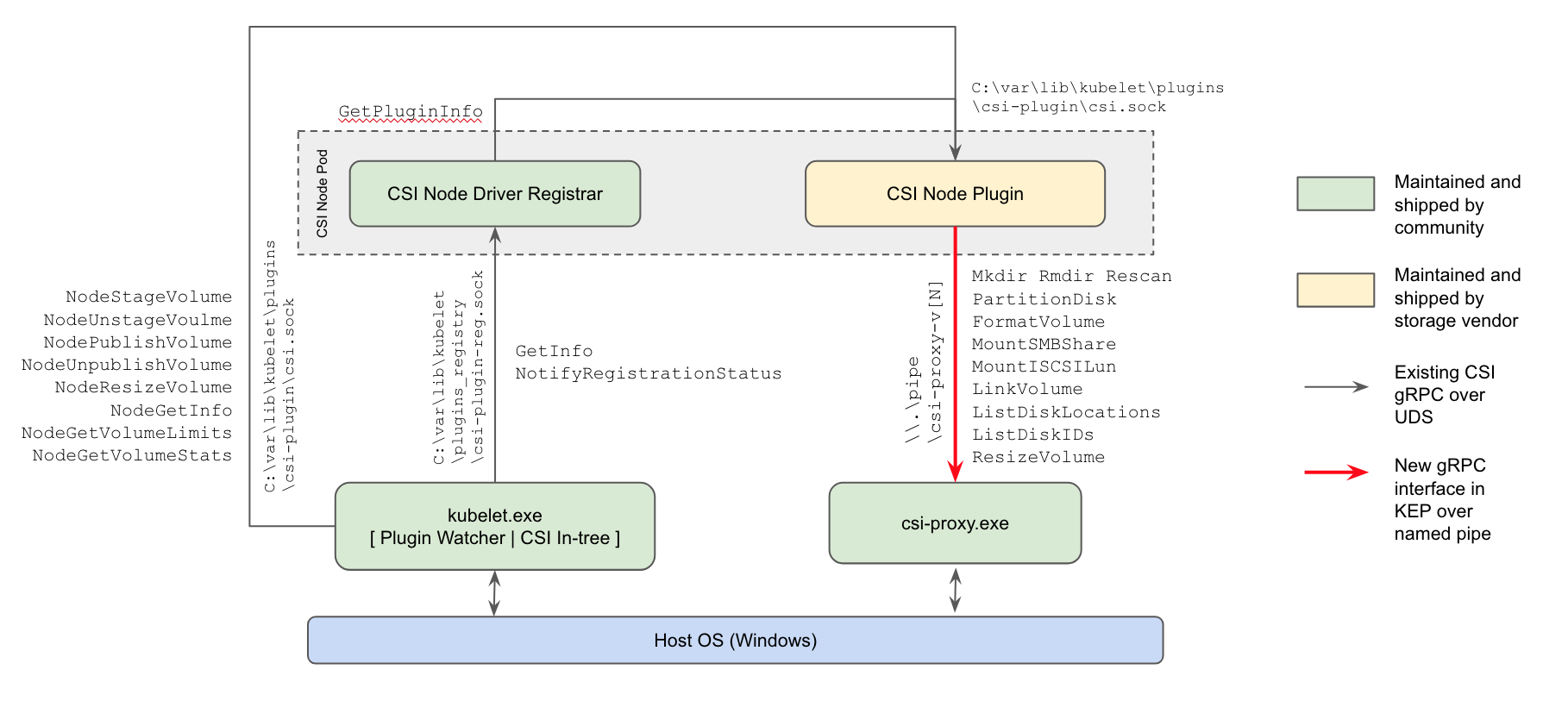

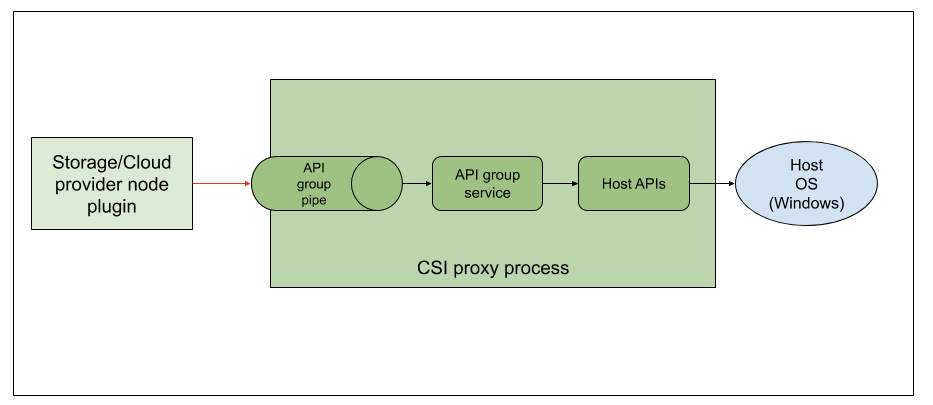

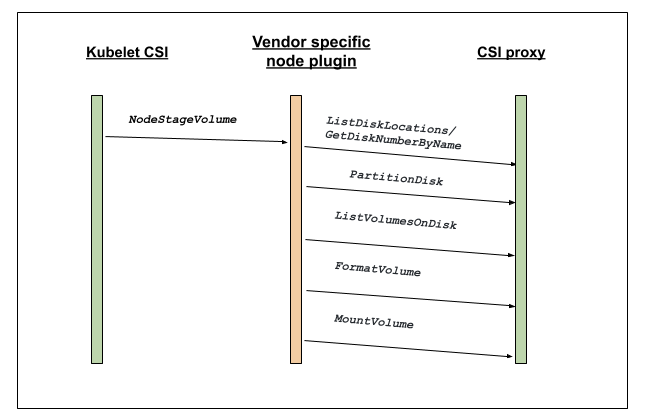

- Introducing Windows CSI support alpha for Kubernetes

- Improvements to the Ingress API in Kubernetes 1.18

- Kubernetes 1.18 Feature Server-side Apply Beta 2

- Kubernetes Topology Manager Moves to Beta - Align Up!

- Kubernetes 1.18: Fit & Finish

- Join SIG Scalability and Learn Kubernetes the Hard Way

- Kong Ingress Controller and Service Mesh: Setting up Ingress to Istio on Kubernetes

- Contributor Summit Amsterdam Postponed

- Bring your ideas to the world with kubectl plugins

- Contributor Summit Amsterdam Schedule Announced

- Deploying External OpenStack Cloud Provider with Kubeadm

- KubeInvaders - Gamified Chaos Engineering Tool for Kubernetes

- CSI Ephemeral Inline Volumes

- Reviewing 2019 in Docs

- Kubernetes on MIPS

- Announcing the Kubernetes bug bounty program

- Remembering Brad Childs

- Testing of CSI drivers

- Kubernetes 1.17: Stability

- Kubernetes 1.17 Feature: Kubernetes Volume Snapshot Moves to Beta

- Kubernetes 1.17 Feature: Kubernetes In-Tree to CSI Volume Migration Moves to Beta

- When you're in the release team, you're family: the Kubernetes 1.16 release interview

- Gardener Project Update

- Develop a Kubernetes controller in Java

- Running Kubernetes locally on Linux with Microk8s

- Grokkin' the Docs

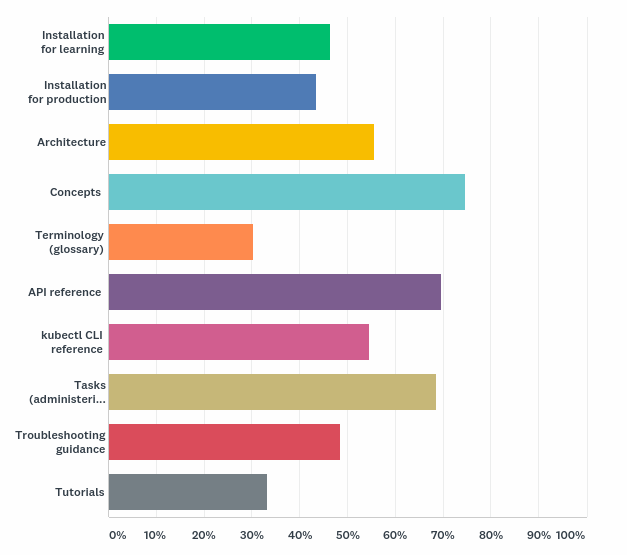

- Kubernetes Documentation Survey

- Contributor Summit San Diego Schedule Announced!

- 2019 Steering Committee Election Results

- Contributor Summit San Diego Registration Open!

- Kubernetes 1.16: Custom Resources, Overhauled Metrics, and Volume Extensions

- Announcing etcd 3.4

- OPA Gatekeeper: Policy and Governance for Kubernetes

- Get started with Kubernetes (using Python)

- Deprecated APIs Removed In 1.16: Here’s What You Need To Know

- Recap of Kubernetes Contributor Summit Barcelona 2019

- Automated High Availability in kubeadm v1.15: Batteries Included But Swappable

- Introducing Volume Cloning Alpha for Kubernetes

- Future of CRDs: Structural Schemas

- Kubernetes 1.15: Extensibility and Continuous Improvement

- Join us at the Contributor Summit in Shanghai

- Kyma - extend and build on Kubernetes with ease

- Kubernetes, Cloud Native, and the Future of Software

- Expanding our Contributor Workshops

- Cat shirts and Groundhog Day: the Kubernetes 1.14 release interview

- Join us for the 2019 KubeCon Diversity Lunch & Hack

- How You Can Help Localize Kubernetes Docs

- Hardware Accelerated SSL/TLS Termination in Ingress Controllers using Kubernetes Device Plugins and RuntimeClass

- Introducing kube-iptables-tailer: Better Networking Visibility in Kubernetes Clusters

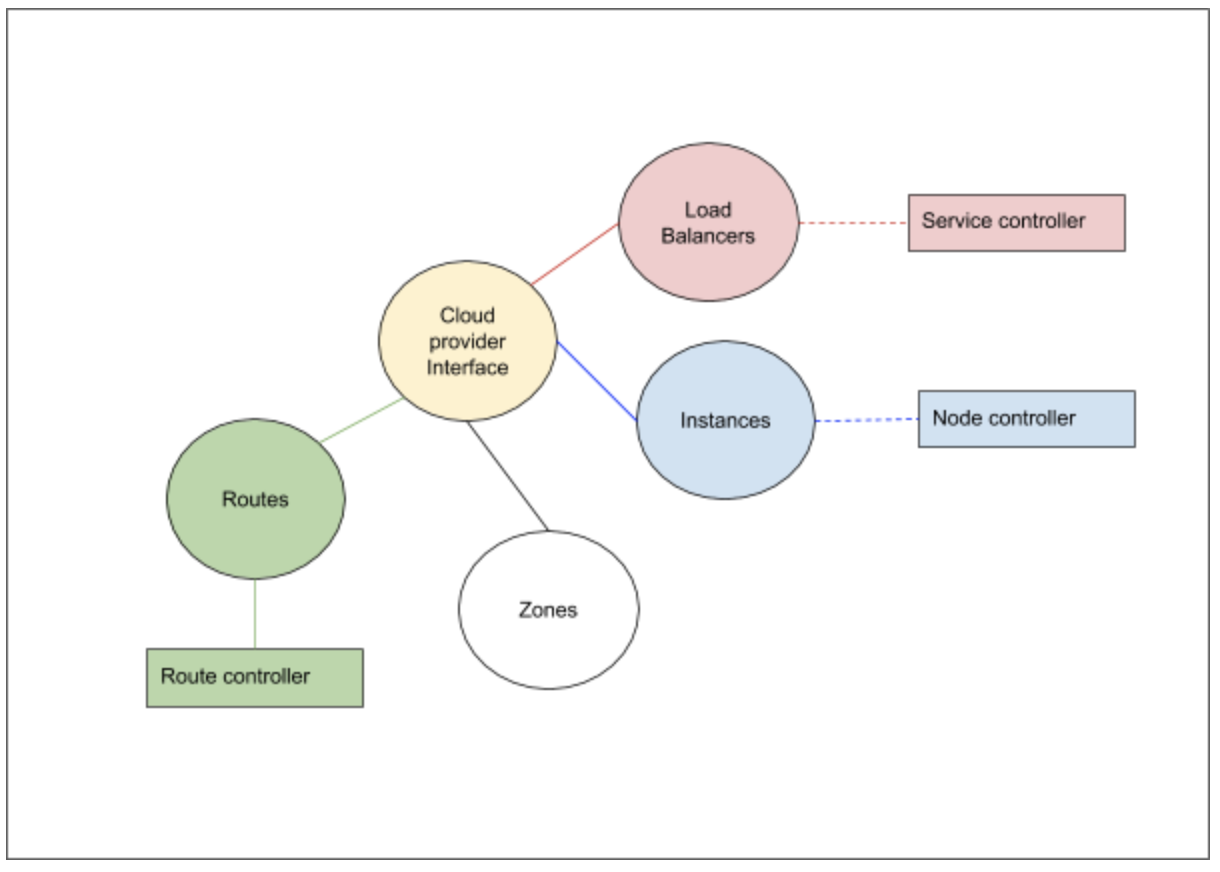

- The Future of Cloud Providers in Kubernetes

- Pod Priority and Preemption in Kubernetes

- Process ID Limiting for Stability Improvements in Kubernetes 1.14

- Kubernetes 1.14: Local Persistent Volumes GA

- Kubernetes v1.14 delivers production-level support for Windows nodes and Windows containers

- kube-proxy Subtleties: Debugging an Intermittent Connection Reset

- Running Kubernetes locally on Linux with Minikube - now with Kubernetes 1.14 support

- Kubernetes 1.14: Production-level support for Windows Nodes, Kubectl Updates, Persistent Local Volumes GA

- Kubernetes End-to-end Testing for Everyone

- A Guide to Kubernetes Admission Controllers

- A Look Back and What's in Store for Kubernetes Contributor Summits

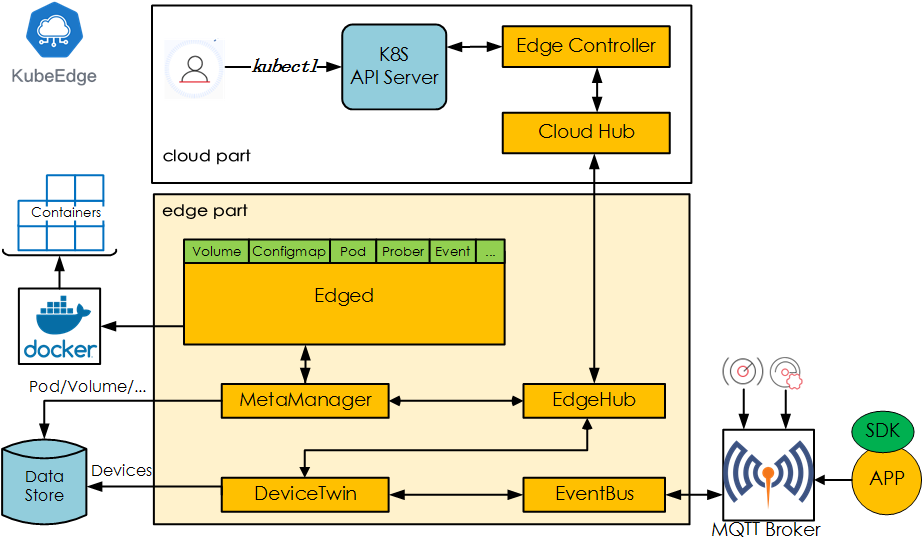

- KubeEdge, a Kubernetes Native Edge Computing Framework

- Kubernetes Setup Using Ansible and Vagrant

- Raw Block Volume support to Beta

- Automate Operations on your Cluster with OperatorHub.io

- Building a Kubernetes Edge (Ingress) Control Plane for Envoy v2

- Runc and CVE-2019-5736

- Poseidon-Firmament Scheduler – Flow Network Graph Based Scheduler

- Update on Volume Snapshot Alpha for Kubernetes

- Container Storage Interface (CSI) for Kubernetes GA

- APIServer dry-run and kubectl diff

- Kubernetes Federation Evolution

- etcd: Current status and future roadmap

- New Contributor Workshop Shanghai

- Production-Ready Kubernetes Cluster Creation with kubeadm

- Kubernetes 1.13: Simplified Cluster Management with Kubeadm, Container Storage Interface (CSI), and CoreDNS as Default DNS are Now Generally Available

- Kubernetes Docs Updates, International Edition

- gRPC Load Balancing on Kubernetes without Tears

- Tips for Your First Kubecon Presentation - Part 2

- Tips for Your First Kubecon Presentation - Part 1

- Kubernetes 2018 North American Contributor Summit

- 2018 Steering Committee Election Results

- Topology-Aware Volume Provisioning in Kubernetes

- Kubernetes v1.12: Introducing RuntimeClass

- Introducing Volume Snapshot Alpha for Kubernetes

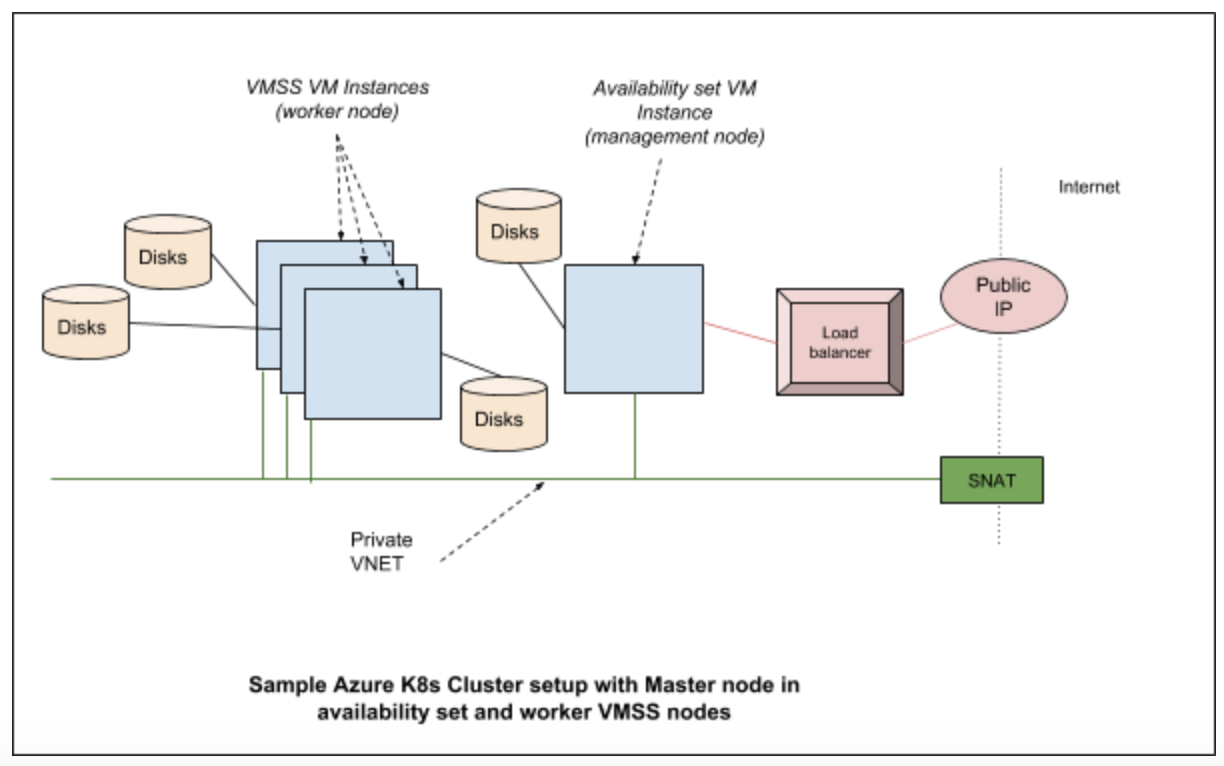

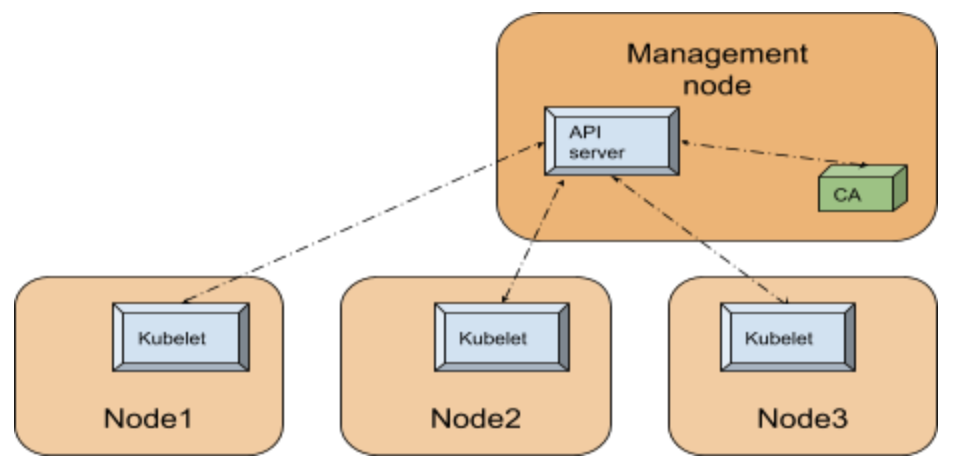

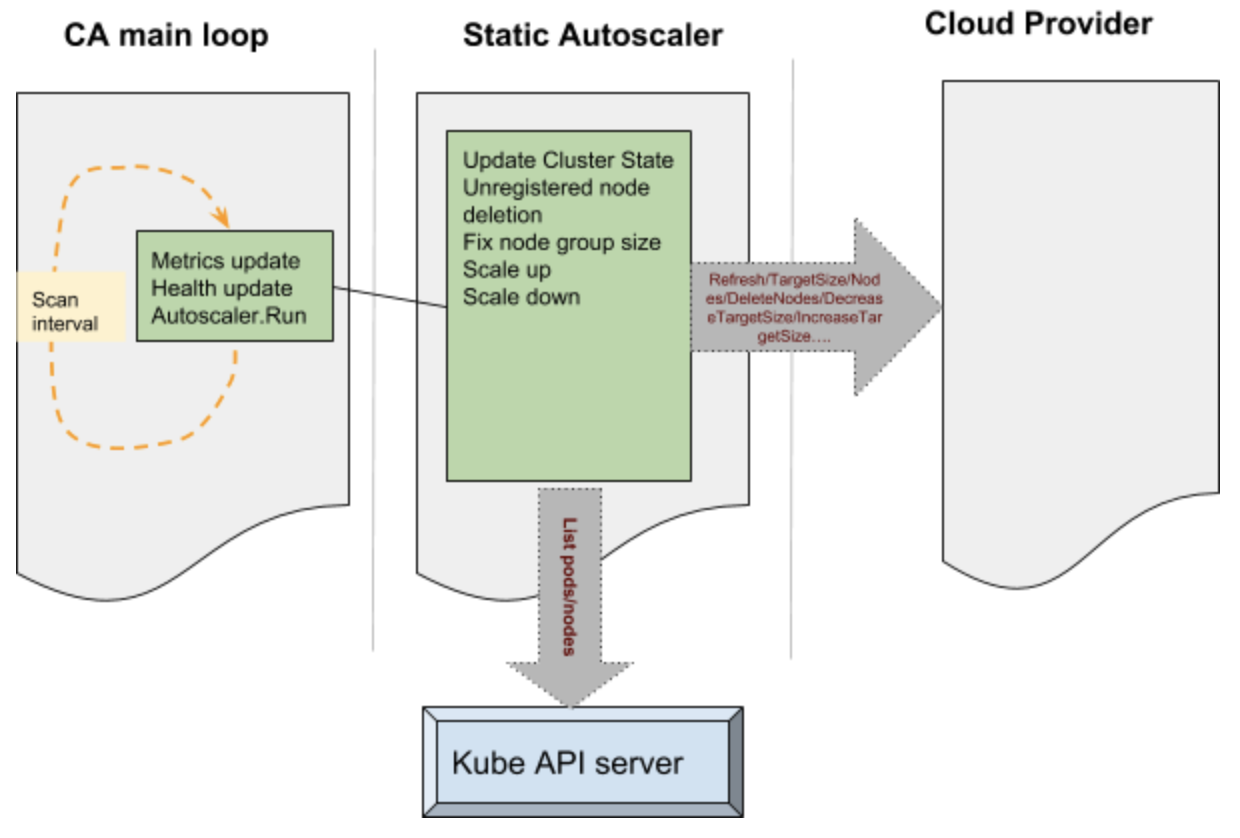

- Support for Azure VMSS, Cluster-Autoscaler and User Assigned Identity

- Introducing the Non-Code Contributor’s Guide

- KubeDirector: The easy way to run complex stateful applications on Kubernetes

- Building a Network Bootable Server Farm for Kubernetes with LTSP

- Health checking gRPC servers on Kubernetes

- Kubernetes 1.12: Kubelet TLS Bootstrap and Azure Virtual Machine Scale Sets (VMSS) Move to General Availability

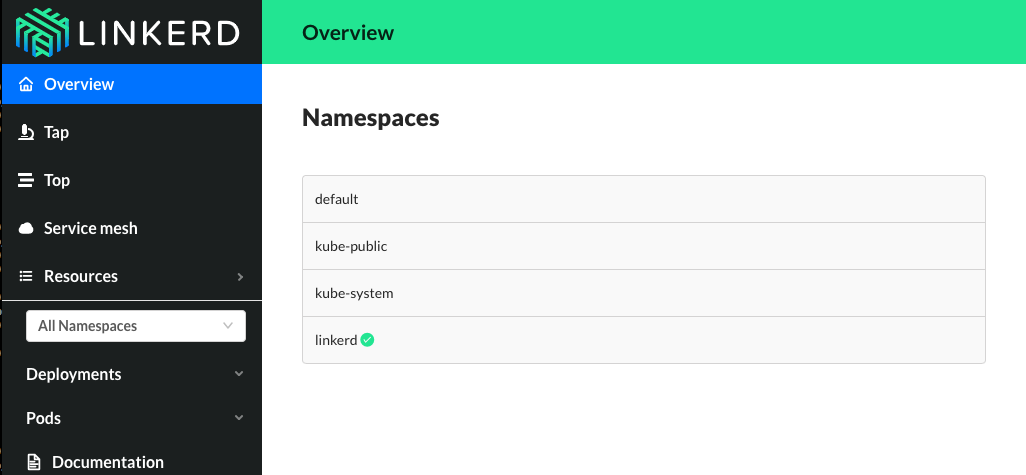

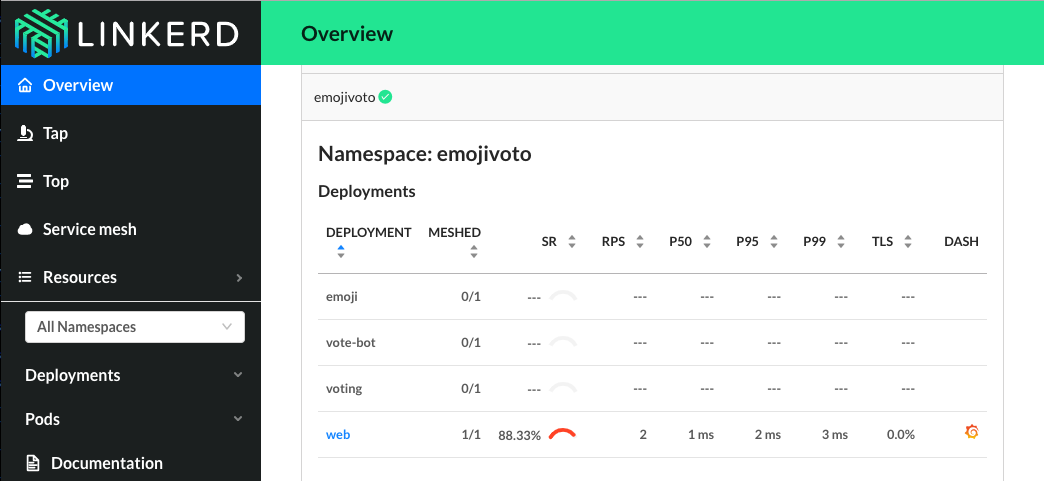

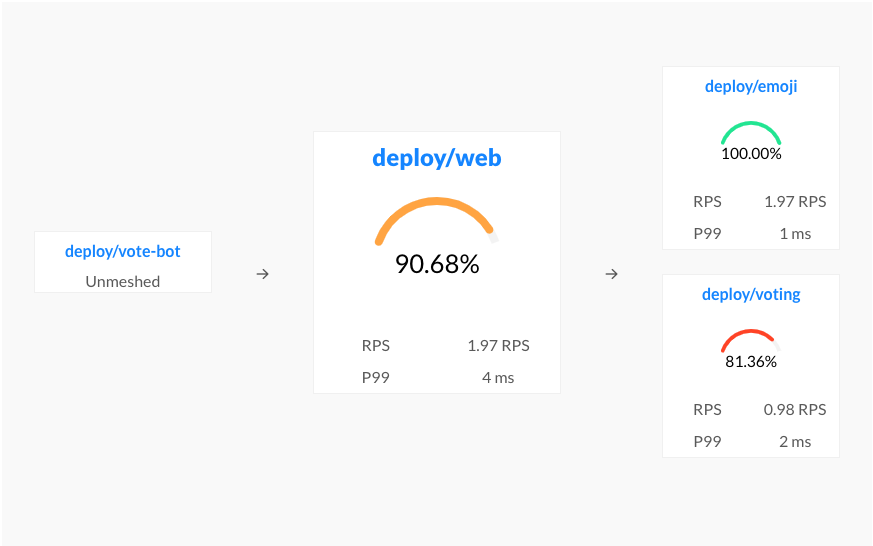

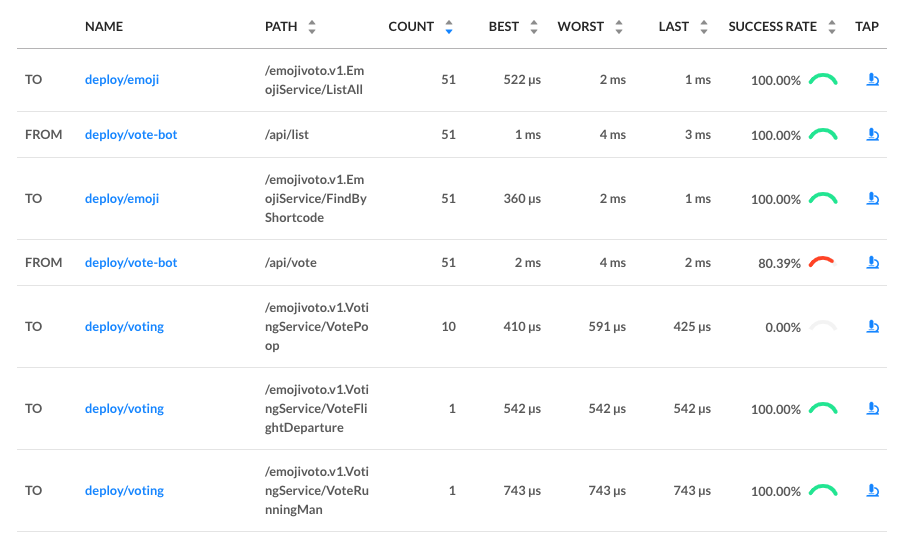

- Hands On With Linkerd 2.0

- 2018 Steering Committee Election Cycle Kicks Off

- The Machines Can Do the Work, a Story of Kubernetes Testing, CI, and Automating the Contributor Experience

- Introducing Kubebuilder: an SDK for building Kubernetes APIs using CRDs

- Out of the Clouds onto the Ground: How to Make Kubernetes Production Grade Anywhere

- Dynamically Expand Volume with CSI and Kubernetes

- KubeVirt: Extending Kubernetes with CRDs for Virtualized Workloads

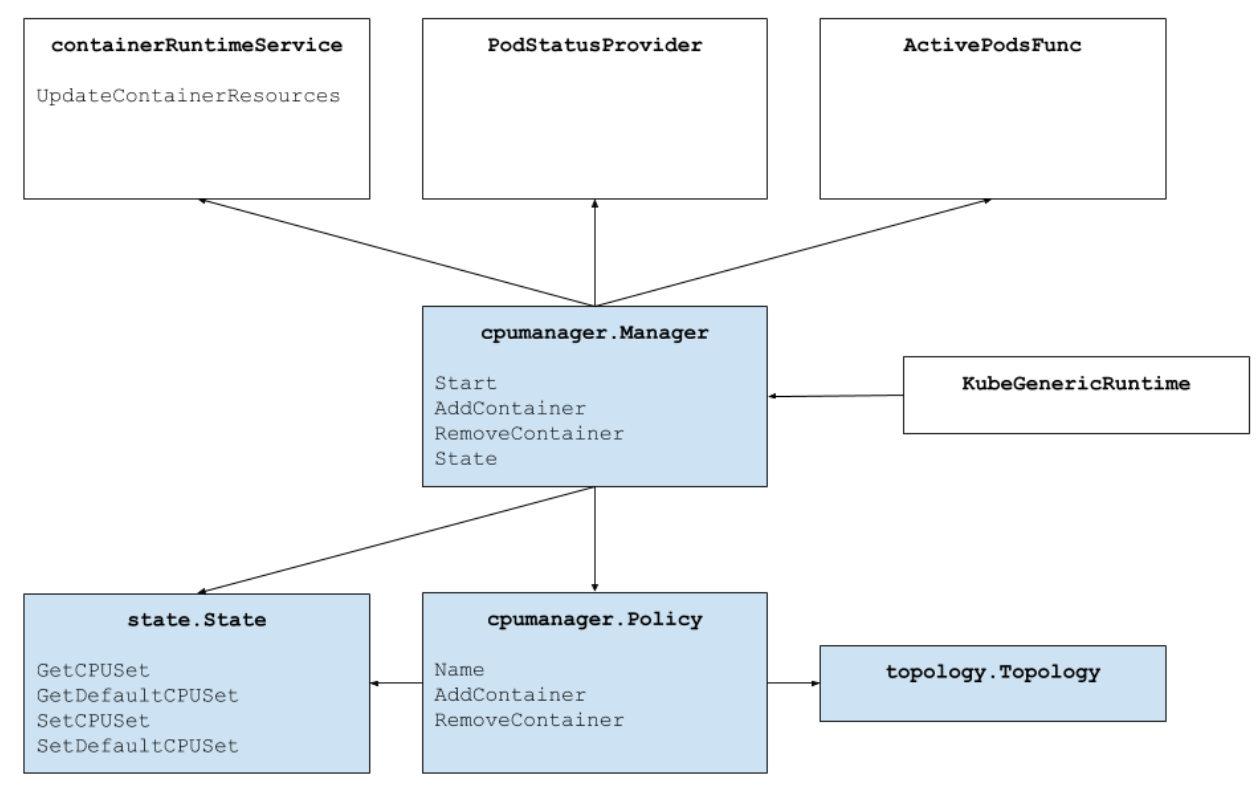

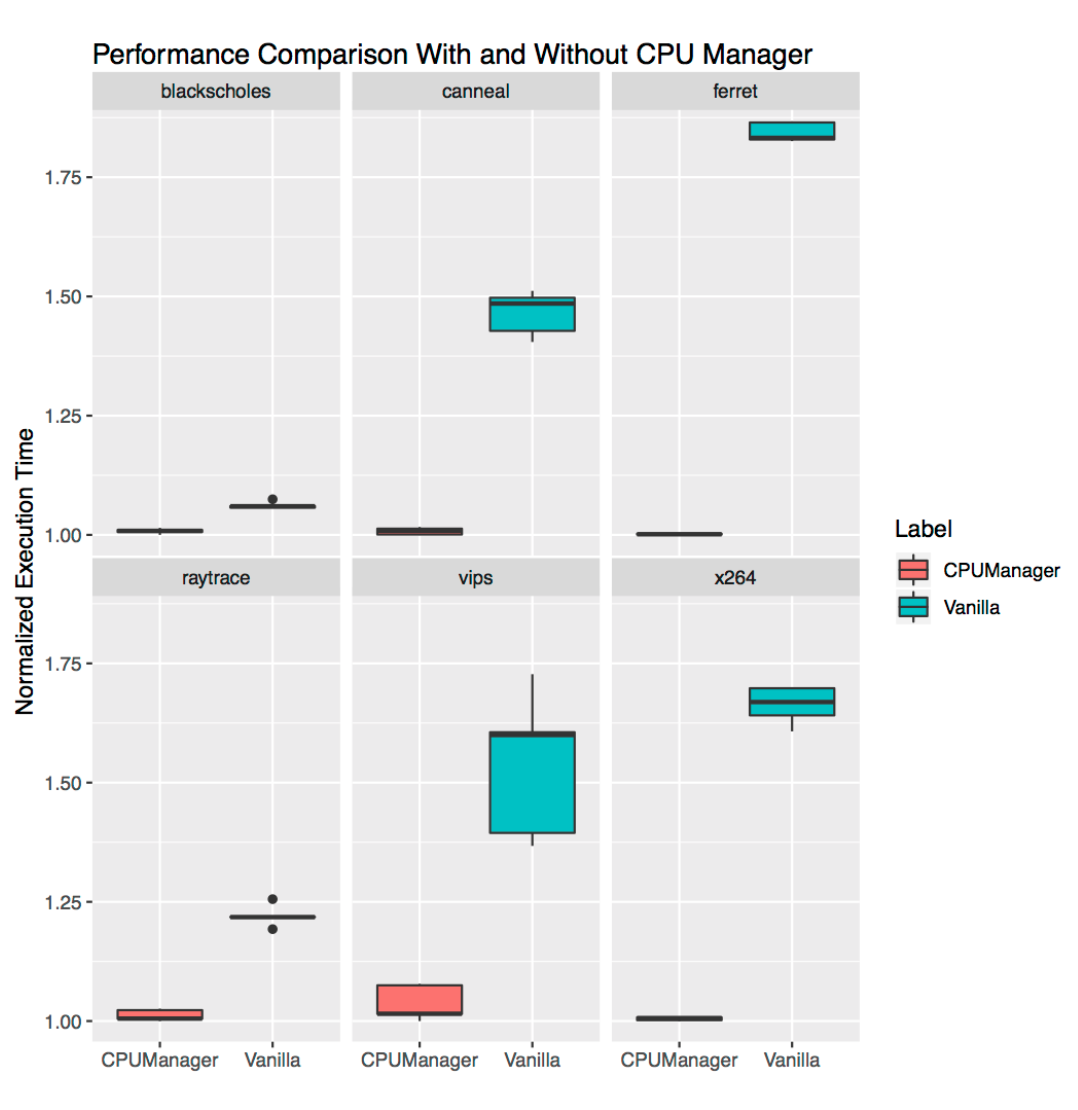

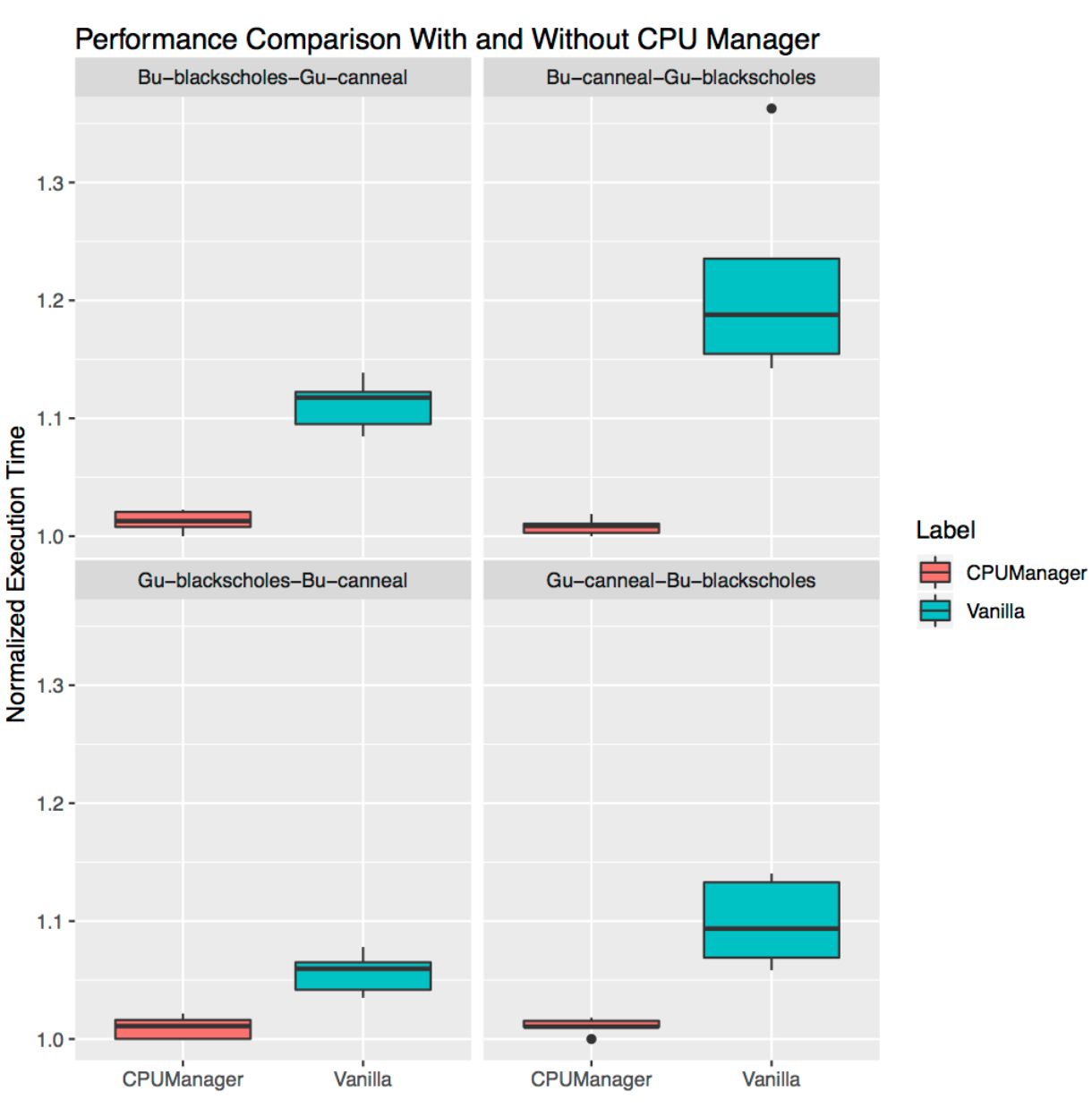

- Feature Highlight: CPU Manager

- The History of Kubernetes & the Community Behind It

- Kubernetes Wins the 2018 OSCON Most Impact Award

- 11 Ways (Not) to Get Hacked

- How the sausage is made: the Kubernetes 1.11 release interview, from the Kubernetes Podcast

- Resizing Persistent Volumes using Kubernetes

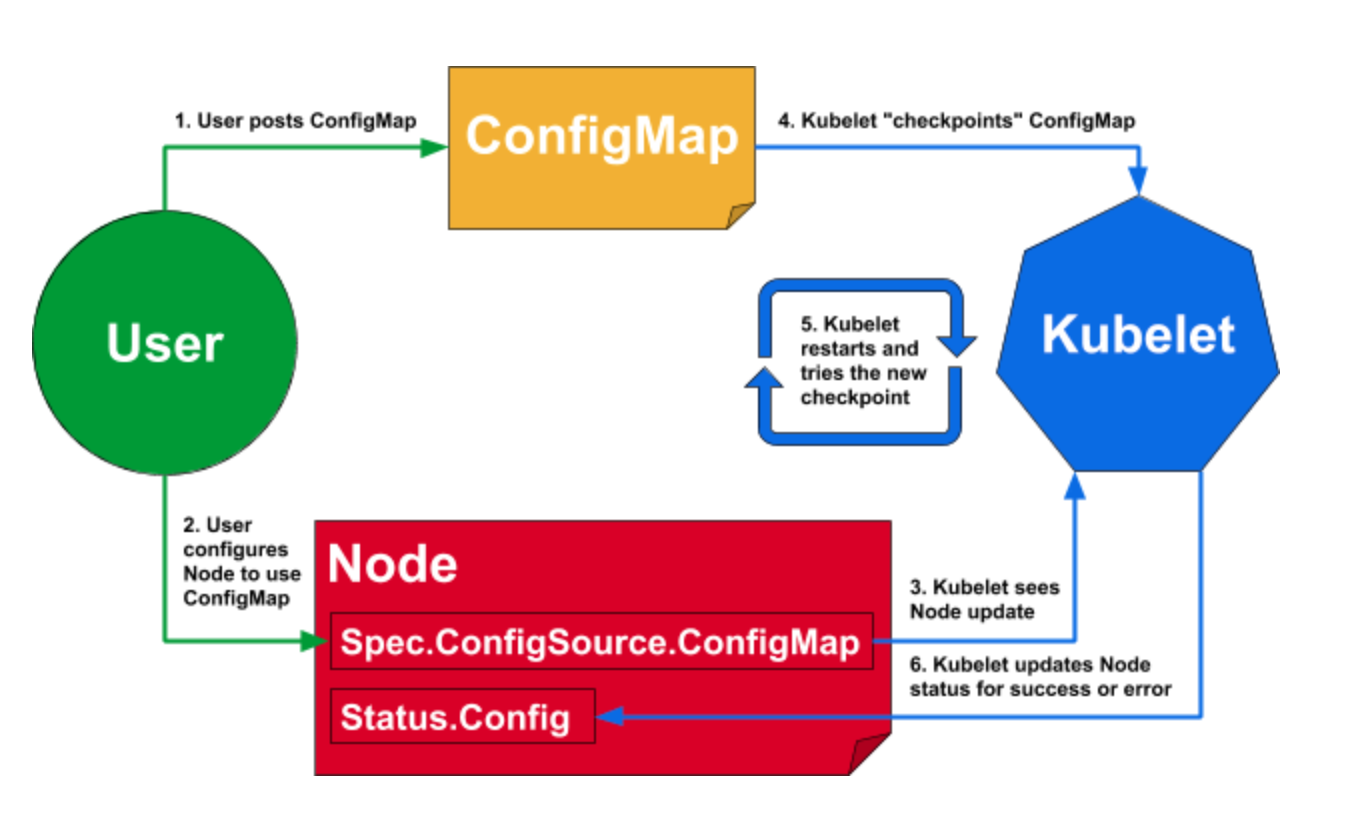

- Dynamic Kubelet Configuration

- CoreDNS GA for Kubernetes Cluster DNS

- Meet Our Contributors - Monthly Streaming YouTube Mentoring Series

- IPVS-Based In-Cluster Load Balancing Deep Dive

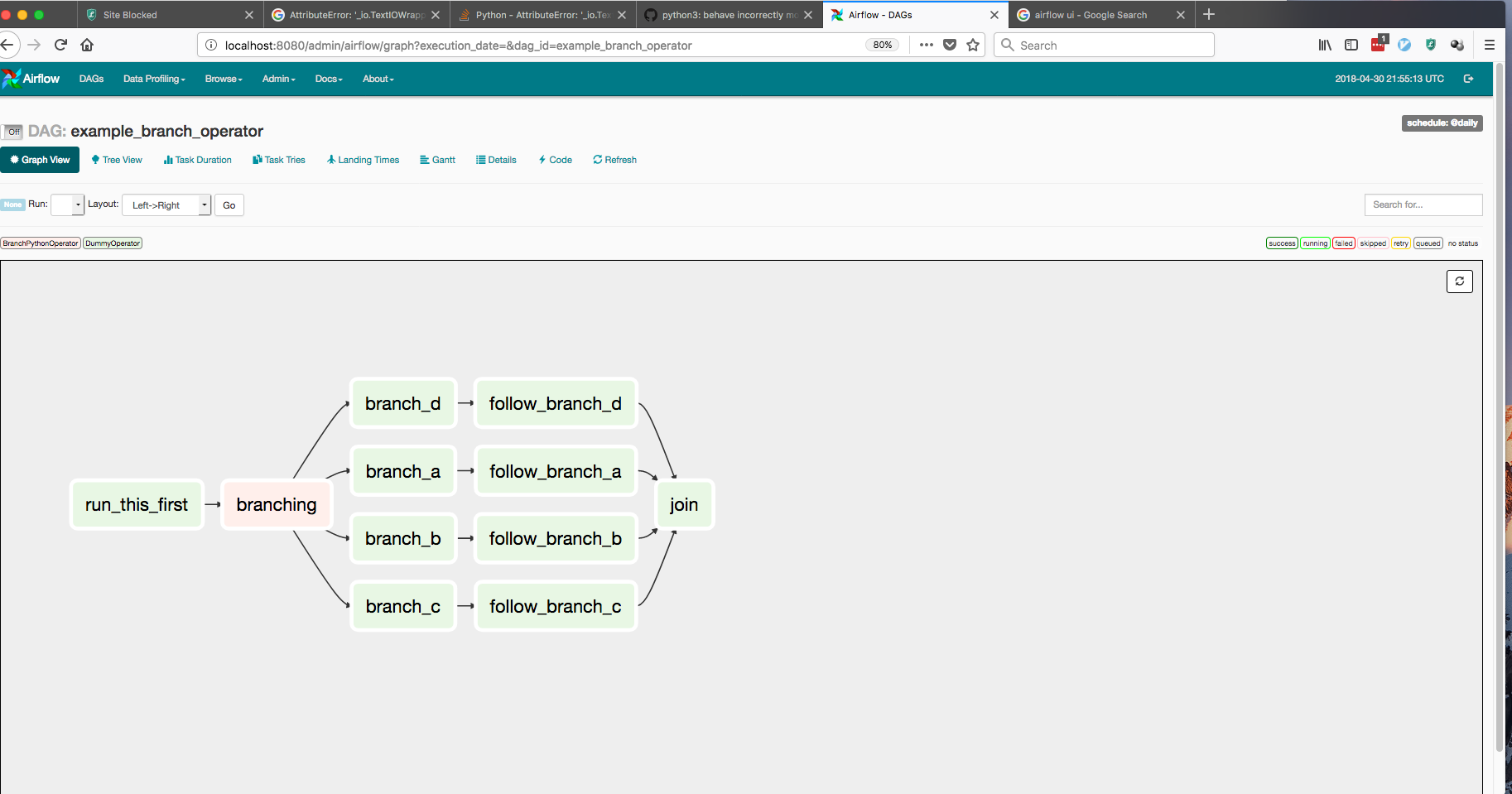

- Airflow on Kubernetes (Part 1): A Different Kind of Operator

- Kubernetes 1.11: In-Cluster Load Balancing and CoreDNS Plugin Graduate to General Availability

- Dynamic Ingress in Kubernetes

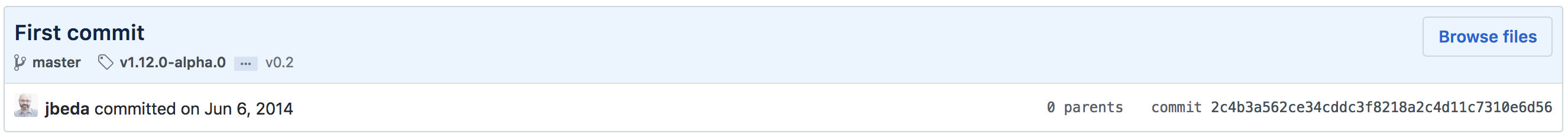

- 4 Years of K8s

- Say Hello to Discuss Kubernetes

- Introducing kustomize; Template-free Configuration Customization for Kubernetes

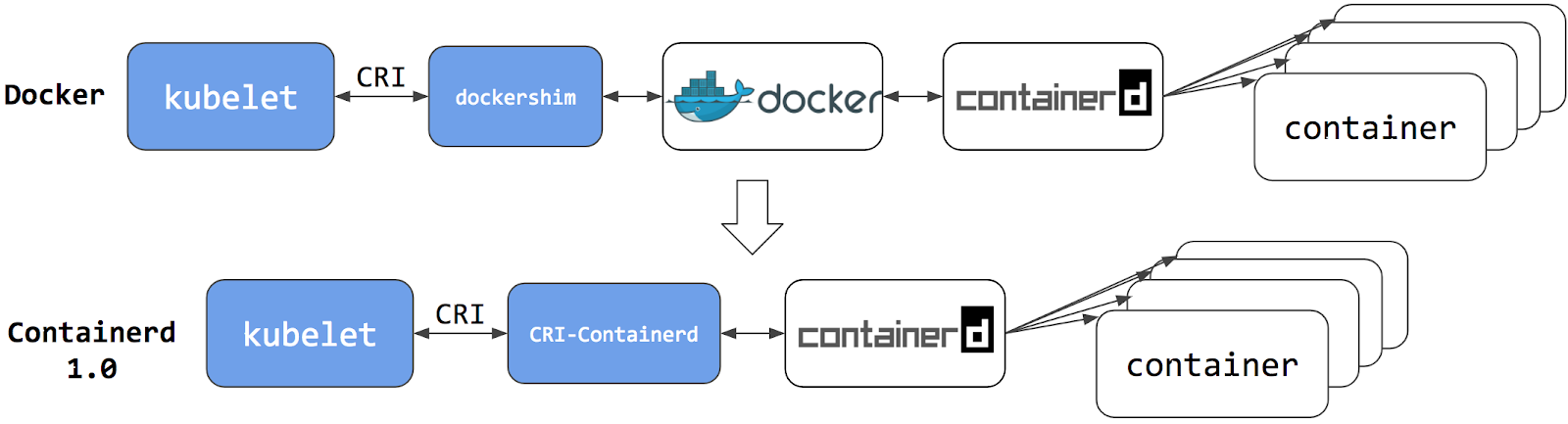

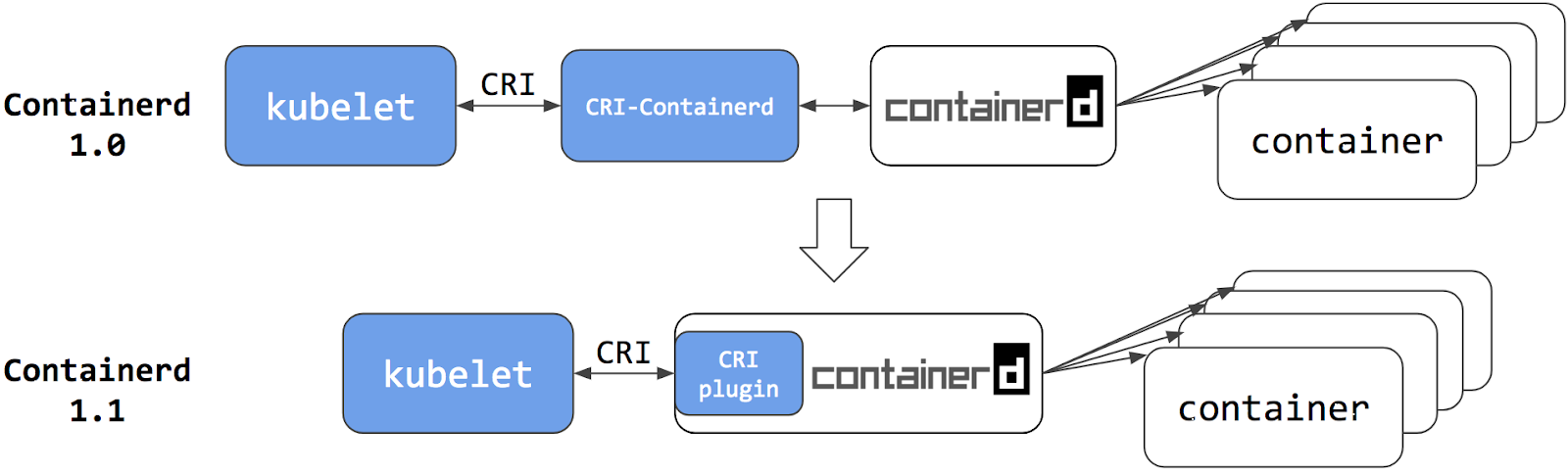

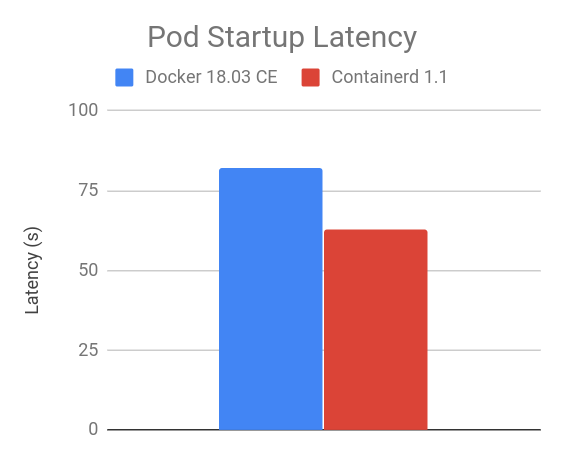

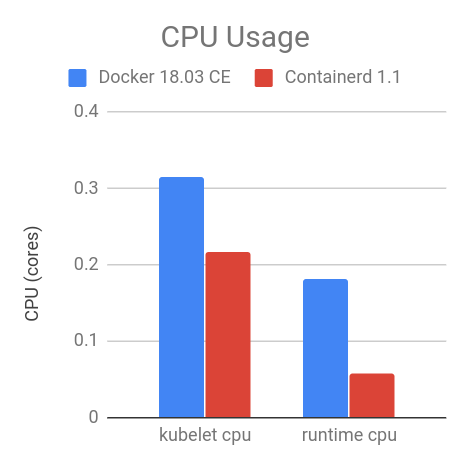

- Kubernetes Containerd Integration Goes GA

- Getting to Know Kubevirt

- Gardener - The Kubernetes Botanist

- Docs are Migrating from Jekyll to Hugo

- Announcing Kubeflow 0.1

- Current State of Policy in Kubernetes

- Developing on Kubernetes

- Zero-downtime Deployment in Kubernetes with Jenkins

- Kubernetes Community - Top of the Open Source Charts in 2017

- Kubernetes Application Survey 2018 Results

- Local Persistent Volumes for Kubernetes Goes Beta

- Migrating the Kubernetes Blog

- Container Storage Interface (CSI) for Kubernetes Goes Beta

- Fixing the Subpath Volume Vulnerability in Kubernetes

- Kubernetes 1.10: Stabilizing Storage, Security, and Networking

- Principles of Container-based Application Design

- Expanding User Support with Office Hours

- How to Integrate RollingUpdate Strategy for TPR in Kubernetes

- Apache Spark 2.3 with Native Kubernetes Support

- Kubernetes: First Beta Version of Kubernetes 1.10 is Here

- Reporting Errors from Control Plane to Applications Using Kubernetes Events

- Core Workloads API GA

- Introducing client-go version 6

- Extensible Admission is Beta

- Introducing Container Storage Interface (CSI) Alpha for Kubernetes

- Kubernetes v1.9 releases beta support for Windows Server Containers

- Five Days of Kubernetes 1.9

- Introducing Kubeflow - A Composable, Portable, Scalable ML Stack Built for Kubernetes

- Kubernetes 1.9: Apps Workloads GA and Expanded Ecosystem

- Using eBPF in Kubernetes

- PaddlePaddle Fluid: Elastic Deep Learning on Kubernetes

- Autoscaling in Kubernetes

- Certified Kubernetes Conformance Program: Launch Celebration Round Up

- Kubernetes is Still Hard (for Developers)

- Securing Software Supply Chain with Grafeas

- Containerd Brings More Container Runtime Options for Kubernetes

- Kubernetes the Easy Way

- Enforcing Network Policies in Kubernetes

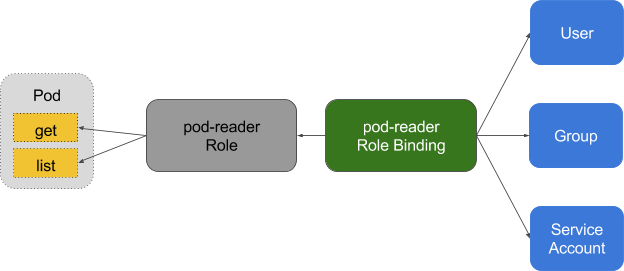

- Using RBAC, Generally Available in Kubernetes v1.8

- It Takes a Village to Raise a Kubernetes

- kubeadm v1.8 Released: Introducing Easy Upgrades for Kubernetes Clusters

- Five Days of Kubernetes 1.8

- Introducing Software Certification for Kubernetes

- Request Routing and Policy Management with the Istio Service Mesh

- Kubernetes Community Steering Committee Election Results

- Kubernetes 1.8: Security, Workloads and Feature Depth

- Kubernetes StatefulSets & DaemonSets Updates

- Introducing the Resource Management Working Group

- Windows Networking at Parity with Linux for Kubernetes

- Kubernetes Meets High-Performance Computing

- High Performance Networking with EC2 Virtual Private Clouds

- Kompose Helps Developers Move Docker Compose Files to Kubernetes

- Happy Second Birthday: A Kubernetes Retrospective

- How Watson Health Cloud Deploys Applications with Kubernetes

- Kubernetes 1.7: Security Hardening, Stateful Application Updates and Extensibility

- Managing microservices with the Istio service mesh

- Draft: Kubernetes container development made easy

- Kubernetes: a monitoring guide

- Kubespray Ansible Playbooks foster Collaborative Kubernetes Ops

- Dancing at the Lip of a Volcano: The Kubernetes Security Process - Explained

- How Bitmovin is Doing Multi-Stage Canary Deployments with Kubernetes in the Cloud and On-Prem

- RBAC Support in Kubernetes

- Configuring Private DNS Zones and Upstream Nameservers in Kubernetes

- Advanced Scheduling in Kubernetes

- Scalability updates in Kubernetes 1.6: 5,000 node and 150,000 pod clusters

- Dynamic Provisioning and Storage Classes in Kubernetes

- Five Days of Kubernetes 1.6

- Kubernetes 1.6: Multi-user, Multi-workloads at Scale

- The K8sPort: Engaging Kubernetes Community One Activity at a Time

- Deploying PostgreSQL Clusters using StatefulSets

- Containers as a Service, the foundation for next generation PaaS

- Inside JD.com's Shift to Kubernetes from OpenStack

- Run Deep Learning with PaddlePaddle on Kubernetes

- Highly Available Kubernetes Clusters

- Fission: Serverless Functions as a Service for Kubernetes

- Running MongoDB on Kubernetes with StatefulSets

- How we run Kubernetes in Kubernetes aka Kubeception

- Scaling Kubernetes deployments with Policy-Based Networking

- A Stronger Foundation for Creating and Managing Kubernetes Clusters

- Kubernetes UX Survey Infographic

- Kubernetes supports OpenAPI

- Cluster Federation in Kubernetes 1.5

- Windows Server Support Comes to Kubernetes

- StatefulSet: Run and Scale Stateful Applications Easily in Kubernetes

- Five Days of Kubernetes 1.5

- Introducing Container Runtime Interface (CRI) in Kubernetes

- Kubernetes 1.5: Supporting Production Workloads

- From Network Policies to Security Policies

- Kompose: a tool to go from Docker-compose to Kubernetes

- Kubernetes Containers Logging and Monitoring with Sematext

- Visualize Kubelet Performance with Node Dashboard

- CNCF Partners With The Linux Foundation To Launch New Kubernetes Certification, Training and Managed Service Provider Program

- Bringing Kubernetes Support to Azure Container Service

- Modernizing the Skytap Cloud Micro-Service Architecture with Kubernetes

- Introducing Kubernetes Service Partners program and a redesigned Partners page

- Tail Kubernetes with Stern

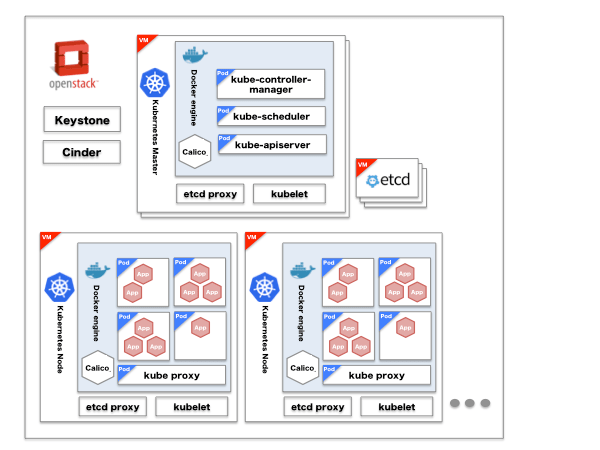

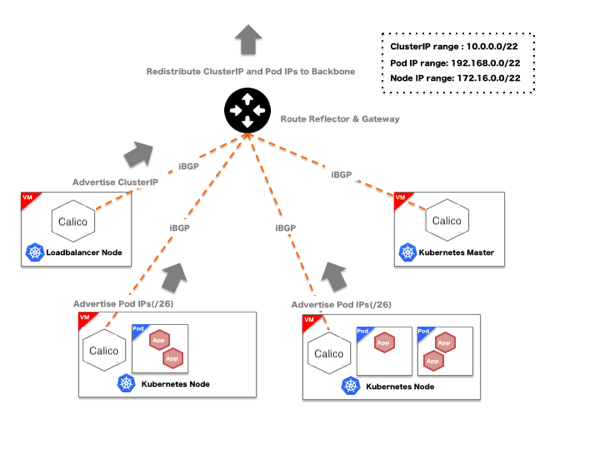

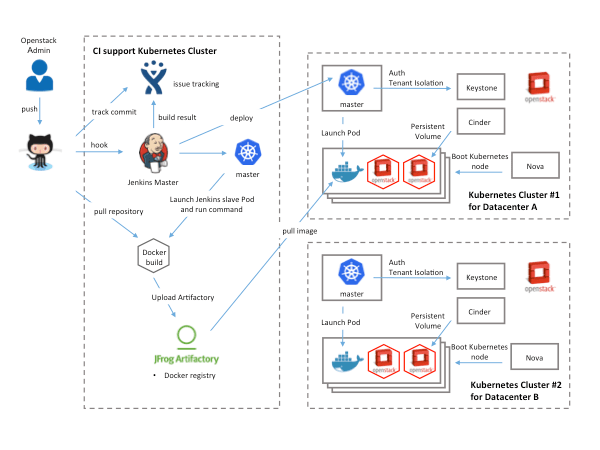

- How We Architected and Run Kubernetes on OpenStack at Scale at Yahoo! JAPAN

- Building Globally Distributed Services using Kubernetes Cluster Federation

- Helm Charts: making it simple to package and deploy common applications on Kubernetes

- Dynamic Provisioning and Storage Classes in Kubernetes

- How we improved Kubernetes Dashboard UI in 1.4 for your production needs

- How we made Kubernetes insanely easy to install

- How Qbox Saved 50% per Month on AWS Bills Using Kubernetes and Supergiant

- Kubernetes 1.4: Making it easy to run on Kubernetes anywhere

- High performance network policies in Kubernetes clusters

- Creating a PostgreSQL Cluster using Helm

- Deploying to Multiple Kubernetes Clusters with kit

- Cloud Native Application Interfaces

- Security Best Practices for Kubernetes Deployment

- Scaling Stateful Applications using Kubernetes Pet Sets and FlexVolumes with Datera Elastic Data Fabric

- Kubernetes Namespaces: use cases and insights

- SIG Apps: build apps for and operate them in Kubernetes

- Create a Couchbase cluster using Kubernetes

- Challenges of a Remotely Managed, On-Premises, Bare-Metal Kubernetes Cluster

- Why OpenStack's embrace of Kubernetes is great for both communities

- A Very Happy Birthday Kubernetes

- Happy Birthday Kubernetes. Oh, the places you’ll go!

- The Bet on Kubernetes, a Red Hat Perspective

- Bringing End-to-End Kubernetes Testing to Azure (Part 2)

- Dashboard - Full Featured Web Interface for Kubernetes

- Steering an Automation Platform at Wercker with Kubernetes

- Citrix + Kubernetes = A Home Run

- Cross Cluster Services - Achieving Higher Availability for your Kubernetes Applications

- Stateful Applications in Containers!? Kubernetes 1.3 Says “Yes!”

- Thousand Instances of Cassandra using Kubernetes Pet Set

- Autoscaling in Kubernetes

- Kubernetes in Rancher: the further evolution

- Five Days of Kubernetes 1.3

- Minikube: easily run Kubernetes locally

- rktnetes brings rkt container engine to Kubernetes

- Updates to Performance and Scalability in Kubernetes 1.3 -- 2,000 node 60,000 pod clusters

- Kubernetes 1.3: Bridging Cloud Native and Enterprise Workloads

- Container Design Patterns

- The Illustrated Children's Guide to Kubernetes

- Bringing End-to-End Kubernetes Testing to Azure (Part 1)

- Hypernetes: Bringing Security and Multi-tenancy to Kubernetes

- CoreOS Fest 2016: CoreOS and Kubernetes Community meet in Berlin (& San Francisco)

- Introducing the Kubernetes OpenStack Special Interest Group

- SIG-UI: the place for building awesome user interfaces for Kubernetes

- SIG-ClusterOps: Promote operability and interoperability of Kubernetes clusters

- SIG-Networking: Kubernetes Network Policy APIs Coming in 1.3

- How to deploy secure, auditable, and reproducible Kubernetes clusters on AWS

- Adding Support for Kubernetes in Rancher

- Container survey results - March 2016

- Configuration management with Containers

- Using Deployment objects with Kubernetes 1.2

- Kubernetes 1.2 and simplifying advanced networking with Ingress

- Using Spark and Zeppelin to process big data on Kubernetes 1.2

- AppFormix: Helping Enterprises Operationalize Kubernetes

- Building highly available applications using Kubernetes new multi-zone clusters (a.k.a. 'Ubernetes Lite')

- 1000 nodes and beyond: updates to Kubernetes performance and scalability in 1.2

- Five Days of Kubernetes 1.2

- How container metadata changes your point of view

- Scaling neural network image classification using Kubernetes with TensorFlow Serving

- Kubernetes 1.2: Even more performance upgrades, plus easier application deployment and management

- ElasticBox introduces ElasticKube to help manage Kubernetes within the enterprise

- Kubernetes in the Enterprise with Fujitsu’s Cloud Load Control

- Kubernetes Community Meeting Notes - 20160225

- State of the Container World, February 2016

- KubeCon EU 2016: Kubernetes Community in London

- Kubernetes Community Meeting Notes - 20160218

- Kubernetes Community Meeting Notes - 20160211

- ShareThis: Kubernetes In Production

- Kubernetes Community Meeting Notes - 20160204

- Kubernetes Community Meeting Notes - 20160128

- State of the Container World, January 2016

- Kubernetes Community Meeting Notes - 20160114

- Kubernetes Community Meeting Notes - 20160121

- Why Kubernetes doesn’t use libnetwork

- Simple leader election with Kubernetes and Docker

- Creating a Raspberry Pi cluster running Kubernetes, the installation (Part 2)

- Managing Kubernetes Pods, Services and Replication Controllers with Puppet

- How Weave built a multi-deployment solution for Scope using Kubernetes

- Creating a Raspberry Pi cluster running Kubernetes, the shopping list (Part 1)

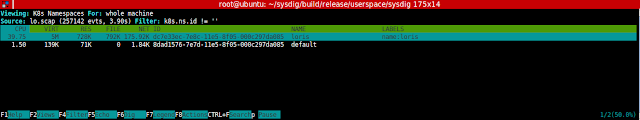

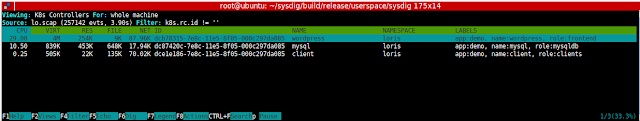

- Monitoring Kubernetes with Sysdig

- One million requests per second: Dependable and dynamic distributed systems at scale

- Kubernetes 1.1 Performance upgrades, improved tooling and a growing community

- Kubernetes as Foundation for Cloud Native PaaS

- Some things you didn’t know about kubectl

- Kubernetes Performance Measurements and Roadmap

- Using Kubernetes Namespaces to Manage Environments

- Weekly Kubernetes Community Hangout Notes - July 31 2015

- The Growing Kubernetes Ecosystem

- Weekly Kubernetes Community Hangout Notes - July 17 2015

- Strong, Simple SSL for Kubernetes Services

- Weekly Kubernetes Community Hangout Notes - July 10 2015

- Announcing the First Kubernetes Enterprise Training Course

- How did the Quake demo from DockerCon Work?

- Kubernetes 1.0 Launch Event at OSCON

- The Distributed System ToolKit: Patterns for Composite Containers

- Slides: Cluster Management with Kubernetes, talk given at the University of Edinburgh

- Cluster Level Logging with Kubernetes

- Weekly Kubernetes Community Hangout Notes - May 22 2015

- Kubernetes on OpenStack

- Docker and Kubernetes and AppC

- Weekly Kubernetes Community Hangout Notes - May 15 2015

- Kubernetes Release: 0.17.0

- Resource Usage Monitoring in Kubernetes

- Weekly Kubernetes Community Hangout Notes - May 1 2015

- Kubernetes Release: 0.16.0

- AppC Support for Kubernetes through RKT

- Weekly Kubernetes Community Hangout Notes - April 24 2015

- Borg: The Predecessor to Kubernetes

- Kubernetes and the Mesosphere DCOS

- Weekly Kubernetes Community Hangout Notes - April 17 2015

- Introducing Kubernetes API Version v1beta3

- Kubernetes Release: 0.15.0

- Weekly Kubernetes Community Hangout Notes - April 10 2015

- Faster than a speeding Latte

- Weekly Kubernetes Community Hangout Notes - April 3 2015

- Participate in a Kubernetes User Experience Study

- Weekly Kubernetes Community Hangout Notes - March 27 2015

- Kubernetes Gathering Videos

- Welcome to the Kubernetes Blog!

Kubernetes v1.31: kubeadm v1beta4

As part of the Kubernetes v1.31 release, kubeadm is adopting a new (v1beta4) version of its configuration file format. Configuration in the previous v1beta3 format is now formally deprecated, which means it's supported but you should migrate to v1beta4 and stop using the deprecated format.

Support for v1beta3 configuration will be removed after a minimum of 3 Kubernetes minor releases.

In this article, I'll walk you through the key changes in the kubeadm v1beta4 configuration format and explain how to migrate from v1beta3 to v1beta4.

You can read the reference for the v1beta4 configuration format: kubeadm Configuration (v1beta4).

A list of changes since v1beta3

This version improves on the v1beta3 format by fixing some minor issues and adding a few new fields.

To put it simply:

- Two new configuration elements: ResetConfiguration and UpgradeConfiguration

- For InitConfiguration and JoinConfiguration, dryRun mode and nodeRegistration.imagePullSerial are supported

- For ClusterConfiguration, there are new fields including certificateValidityPeriod, caCertificateValidityPeriod, encryptionAlgorithm, dns.disabled and proxy.disabled

- Support extraEnvs for all control plane components

- extraArgs changed from a map to structured extra arguments that support duplicates

- Add a timeouts structure for init, join, upgrade and reset

For details, you can see the official document below:

- Support custom environment variables in control plane components under ClusterConfiguration. Use apiServer.extraEnvs, controllerManager.extraEnvs, scheduler.extraEnvs, etcd.local.extraEnvs.

- The ResetConfiguration API type is now supported in v1beta4. Users are able to reset a node by passing a --config file to kubeadm reset.

- dryRun mode is now configurable in InitConfiguration and JoinConfiguration.

- Replace the existing string/string extra argument maps with structured extra arguments that support duplicates. The change applies to ClusterConfiguration: apiServer.extraArgs, controllerManager.extraArgs, scheduler.extraArgs, etcd.local.extraArgs. Also to nodeRegistrationOptions.kubeletExtraArgs.

- Added ClusterConfiguration.encryptionAlgorithm that can be used to set the asymmetric encryption algorithm used for this cluster's keys and certificates. Can be one of "RSA-2048" (default), "RSA-3072", "RSA-4096" or "ECDSA-P256".

- Added ClusterConfiguration.dns.disabled and ClusterConfiguration.proxy.disabled that can be used to disable the CoreDNS and kube-proxy addons during cluster initialization. Skipping the related addon phases during cluster creation will set the same fields to true.

- Added the nodeRegistration.imagePullSerial field in InitConfiguration and JoinConfiguration, which can be used to control if kubeadm pulls images serially or in parallel.

- The UpgradeConfiguration kubeadm API is now supported in v1beta4 when passing --config to kubeadm upgrade subcommands. For upgrade subcommands, the usage of component configuration for kubelet and kube-proxy, as well as InitConfiguration and ClusterConfiguration, is now deprecated and will be ignored when passing --config.

- Added a timeouts structure to InitConfiguration, JoinConfiguration, ResetConfiguration and UpgradeConfiguration that can be used to configure various timeouts. The ClusterConfiguration.timeoutForControlPlane field is replaced by timeouts.controlPlaneComponentHealthCheck. The JoinConfiguration.discovery.timeout is replaced by timeouts.discovery.

- Added the certificateValidityPeriod and caCertificateValidityPeriod fields to ClusterConfiguration. These fields can be used to control the validity period of certificates generated by kubeadm during sub-commands such as init, join, upgrade and certs. Default values continue to be 1 year for non-CA certificates and 10 years for CA certificates. Also note that only non-CA certificates are renewable by kubeadm certs renew.

These changes simplify the configuration of tools that use kubeadm and improve the extensibility of kubeadm itself.
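To make the list above more concrete, here is a minimal sketch of a v1beta4 configuration file that exercises several of the new fields. All values (the issuer URLs, the proxy address, the durations) are illustrative assumptions rather than recommendations, so check the v1beta4 reference before reusing any of them.

```yaml
# Sketch of a kubeadm v1beta4 configuration; all values are illustrative.
apiVersion: kubeadm.k8s.io/v1beta4
kind: InitConfiguration
dryRun: false                  # dry-run mode is now configurable from the file
nodeRegistration:
  imagePullSerial: false       # pull images in parallel instead of serially
timeouts:
  controlPlaneComponentHealthCheck: 4m0s   # replaces timeoutForControlPlane
---
apiVersion: kubeadm.k8s.io/v1beta4
kind: ClusterConfiguration
encryptionAlgorithm: RSA-3072
certificateValidityPeriod: 8760h0m0s       # 1 year, the default for non-CA certificates
caCertificateValidityPeriod: 87600h0m0s    # 10 years, the default for CA certificates
dns:
  disabled: false
proxy:
  disabled: false
apiServer:
  extraArgs:                   # a list of name/value pairs, so a flag can repeat
  - name: service-account-issuer
    value: https://issuer-new.example.com   # hypothetical URL
  - name: service-account-issuer
    value: https://issuer-old.example.com   # hypothetical URL
  extraEnvs:
  - name: HTTPS_PROXY
    value: http://proxy.example.internal:3128   # hypothetical proxy address
```

The repeated service-account-issuer entry is the kind of configuration that the old string/string map could not express, because a map key can appear only once.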

How to migrate v1beta3 configuration to v1beta4?

If your configuration is not using the latest version, it is recommended that you migrate using the kubeadm config migrate command.

This command reads an existing configuration file that uses the old format, and writes a new file that uses the current format.

Example

Using kubeadm v1.31, run kubeadm config migrate --old-config old-v1beta3.yaml --new-config new-v1beta4.yaml
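As a rough illustration of what the migration changes, the sketch below shows the same apiServer extra argument in both formats. The argument and its value are hypothetical, and the actual output of kubeadm config migrate may differ (for example, it may include defaulted fields). The two documents are shown side by side for comparison and are not meant to live in one file.

```yaml
# Before: v1beta3 (old-v1beta3.yaml), extraArgs is a string/string map
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
apiServer:
  extraArgs:
    audit-log-maxage: "30"     # hypothetical argument and value
---
# After: v1beta4 (new-v1beta4.yaml), extraArgs is a list of name/value pairs
apiVersion: kubeadm.k8s.io/v1beta4
kind: ClusterConfiguration
apiServer:
  extraArgs:
  - name: audit-log-maxage
    value: "30"
```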

How do I get involved?

Huge thanks to all the contributors who helped with the design, implementation, and review of this feature:

- Lubomir I. Ivanov (neolit123)

- Dave Chen (chendave)

- Paco Xu (pacoxu)

- Sata Qiu (sataqiu)

- Baofa Fan (carlory)

- Calvin Chen (calvin0327)

- Ruquan Zhao (ruquanzhao)

For those interested in getting involved in future discussions on kubeadm configuration, you can reach out to kubeadm or SIG-cluster-lifecycle by several means:

- v1beta4 related items are tracked in kubeadm issue #2890.

- Slack: #kubeadm or #sig-cluster-lifecycle

- Mailing list

Kubernetes 1.31: Custom Profiling in Kubectl Debug Graduates to Beta

There are many ways of troubleshooting the pods and nodes in the cluster. However, kubectl debug is one of the easiest and most widely used. It provides a set of static profiles, each serving a different role. For instance, from the network administrator's point of view, debugging the node should be as easy as this:

$ kubectl debug node/mynode -it --image=busybox --profile=netadmin

On the other hand, static profiles are inherently rigid, which limits their ease of use for some pods. Pods (and nodes) come in many kinds, each with its own specific needs, and unfortunately some can't be debugged using the static profiles alone.

Take, for instance, a simple pod consisting of a container whose health relies on an environment variable:

    apiVersion: v1
    kind: Pod
    metadata:
      name: example-pod
    spec:
      containers:
      - name: example-container
        image: customapp:latest
        env:
        - name: REQUIRED_ENV_VAR
          value: "value1"

Currently, copying the pod is the sole mechanism that supports debugging this pod in kubectl debug. Furthermore, what if the user needs to change REQUIRED_ENV_VAR to a different value for advanced troubleshooting? There is no mechanism to achieve this.

Custom Profiling

Custom profiling is new functionality, available under the --custom flag of kubectl debug, that provides extensibility. It expects a partial Container spec in either YAML or JSON format.

In order to debug the example-container above by creating an ephemeral container, we simply have to define this YAML:

    # partial_container.yaml
    env:
    - name: REQUIRED_ENV_VAR
      value: value2

and execute:

kubectl debug example-pod -it --image=customapp --custom=partial_container.yaml

Here is another example that modifies multiple fields at once (change port number, add resource limits, modify environment variable) in JSON:

    {
      "ports": [
        {
          "containerPort": 80
        }
      ],
      "resources": {
        "limits": {
          "cpu": "0.5",
          "memory": "512Mi"
        },
        "requests": {
          "cpu": "0.2",
          "memory": "256Mi"
        }
      },
      "env": [
        {
          "name": "REQUIRED_ENV_VAR",
          "value": "value2"
        }
      ]
    }

Constraints

Uncontrolled extensibility hurts usability. Therefore, custom profiling is not allowed for certain fields, such as command, image, lifecycle, volume devices and container name. In the future, more fields can be added to the disallowed list if required.

Limitations

The kubectl debug command has 3 aspects: debugging with ephemeral containers, pod copying, and node debugging. The largest intersection of these aspects is the container spec within a Pod.

That's why custom profiling only supports modifying fields that are defined in the container spec. As a result, modifying other fields in the Pod spec is not supported.
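As a sketch of a profile that stays within these constraints and limitations, the partial spec below only touches container-level fields that are not on the disallowed list. The file name, the variable value and the added capability are illustrative assumptions, not taken from the kubectl documentation.

```yaml
# debug_profile.yaml (hypothetical file name)
# Container-level fields only. name, image, command, lifecycle and
# volumeDevices are left out because custom profiling disallows them.
env:
- name: REQUIRED_ENV_VAR
  value: value2
securityContext:
  capabilities:
    add:
    - NET_ADMIN   # assumed capability, chosen only for illustration
```

Such a file would be passed with --custom=debug_profile.yaml in the same way as partial_container.yaml. Pod-level fields cannot be expressed in this file at all.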

Acknowledgments

Special thanks to all the contributors who reviewed and commented on this feature, from the initial conception to its actual implementation (alphabetical order):

Kubernetes 1.31: Fine-grained SupplementalGroups control

This blog discusses a new feature in Kubernetes 1.31 to improve the handling of supplementary groups in containers within Pods.

Motivation: Implicit group memberships defined in /etc/group in the container image

Although this behavior may not be popular with many Kubernetes cluster users/admins, Kubernetes, by default, merges group information from the Pod with information defined in /etc/group in the container image.

Let's look at an example. The Pod below specifies runAsUser=1000, runAsGroup=3000 and supplementalGroups=4000 in the Pod's security context.

    apiVersion: v1
    kind: Pod
    metadata:
      name: implicit-groups
    spec:
      securityContext:
        runAsUser: 1000
        runAsGroup: 3000
        supplementalGroups: [4000]
      containers:
      - name: ctr
        image: registry.k8s.io/e2e-test-images/agnhost:2.45
        command: [ "sh", "-c", "sleep 1h" ]
        securityContext:
          allowPrivilegeEscalation: false

What is the result of the id command in the ctr container?

    # Create the Pod:
    $ kubectl apply -f https://k8s.io/blog/2024-08-22-Fine-grained-SupplementalGroups-control/implicit-groups.yaml
    # Verify that the Pod's Container is running:
    $ kubectl get pod implicit-groups
    # Check the id command
    $ kubectl exec implicit-groups -- id

The output should be similar to this:

uid=1000 gid=3000 groups=3000,4000,50000

Where does group ID 50000 in the supplementary groups (the groups field) come from, even though 50000 is not defined in the Pod's manifest at all? The answer is the /etc/group file in the container image.

Checking the contents of /etc/group in the container image should show something like the following:

    $ kubectl exec implicit-groups -- cat /etc/group
    ...
    user-defined-in-image:x:1000:
    group-defined-in-image:x:50000:user-defined-in-image

Aha! The container's primary user 1000 belongs to the group 50000 in the last entry.

Thus, the group membership defined in /etc/group in the container image for the container's primary user is implicitly merged to the information from the Pod. Please note that this was a design decision the current CRI implementations inherited from Docker, and the community never really reconsidered it until now.

What's wrong with it?

The implicitly merged group information from /etc/group in the container image may cause some concerns, particularly when accessing volumes (see kubernetes/kubernetes#112879 for details), because file permissions are controlled by uid/gid in Linux. Even worse, the implicit gids from /etc/group cannot be detected or validated by any policy engine, because the manifest gives no clue about the implicit group information. This can also be a concern for Kubernetes security.

Fine-grained SupplementalGroups control in a Pod: SupplementalGroupsPolicy

To tackle the above problem, Kubernetes 1.31 introduces a new field, supplementalGroupsPolicy, in the Pod's .spec.securityContext.

This field provides a way to control how supplementary groups are calculated for the container processes in a Pod. The available policies are:

- Merge: The group membership defined in /etc/group for the container's primary user will be merged. If not specified, this policy will be applied (i.e. as-is behavior for backward compatibility).

- Strict: Only the group IDs specified in the fsGroup, supplementalGroups, or runAsGroup fields are attached as the supplementary groups of the container processes. This means no group membership defined in /etc/group for the container's primary user will be merged.

Let's see how the Strict policy works.

    apiVersion: v1
    kind: Pod
    metadata:
      name: strict-supplementalgroups-policy
    spec:
      securityContext:
        runAsUser: 1000
        runAsGroup: 3000
        supplementalGroups: [4000]
        supplementalGroupsPolicy: Strict
      containers:
      - name: ctr
        image: registry.k8s.io/e2e-test-images/agnhost:2.45
        command: [ "sh", "-c", "sleep 1h" ]
        securityContext:
          allowPrivilegeEscalation: false

    # Create the Pod:
    $ kubectl apply -f https://k8s.io/blog/2024-08-22-Fine-grained-SupplementalGroups-control/strict-supplementalgroups-policy.yaml
    # Verify that the Pod's Container is running:
    $ kubectl get pod strict-supplementalgroups-policy
    # Check the process identity:
    $ kubectl exec -it strict-supplementalgroups-policy -- id

The output should be similar to this:

uid=1000 gid=3000 groups=3000,4000

You can see that the Strict policy excludes group 50000 from groups!

Thus, ensuring supplementalGroupsPolicy: Strict (enforced by some policy mechanism) helps prevent the implicit supplementary groups in a Pod.
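One possible policy mechanism is a ValidatingAdmissionPolicy. The sketch below is an assumption about how such a rule could look, not something taken from the feature's documentation. The object names are hypothetical, and the rule only makes sense on clusters where the supplementalGroupsPolicy field is enabled.

```yaml
# Hypothetical policy: reject Pods that do not request the Strict policy.
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicy
metadata:
  name: require-strict-supplemental-groups   # hypothetical name
spec:
  failurePolicy: Fail
  matchConstraints:
    resourceRules:
    - apiGroups: [""]
      apiVersions: ["v1"]
      operations: ["CREATE", "UPDATE"]
      resources: ["pods"]
  validations:
  - expression: >-
      has(object.spec.securityContext) &&
      has(object.spec.securityContext.supplementalGroupsPolicy) &&
      object.spec.securityContext.supplementalGroupsPolicy == 'Strict'
    message: "spec.securityContext.supplementalGroupsPolicy must be Strict"
---
# A binding is needed before the policy takes effect.
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicyBinding
metadata:
  name: require-strict-supplemental-groups   # hypothetical name
spec:
  policyName: require-strict-supplemental-groups
  validationActions: ["Deny"]
```

A check like this only inspects the manifest, so it complements rather than replaces looking at the process identity that is actually attached.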

Note:

Actually, this is not enough, because a container with sufficient privileges or capabilities can change its process identity. Please see the following section for details.

Attached process identity in Pod status

This feature also exposes the process identity attached to the first container process of the container via the .status.containerStatuses[].user.linux field. It is helpful for checking whether implicit group IDs are attached.

    ...
    status:
      containerStatuses:
      - name: ctr
        user:
          linux:
            gid: 3000
            supplementalGroups:
            - 3000
            - 4000
            uid: 1000
    ...

Note:

Please note that the value in the status.containerStatuses[].user.linux field is the process identity initially attached to the first container process in the container. If the container has sufficient privilege to call system calls related to process identity (e.g. setuid(2), setgid(2) or setgroups(2), etc.), the container process can change its identity. Thus, the actual process identity will be dynamic.

Feature availability

To enable the supplementalGroupsPolicy field, the following components have to be used:

- Kubernetes: v1.31 or later, with the SupplementalGroupsPolicy feature gate enabled. As of v1.31, the gate is marked as alpha.

- CRI runtime:

  - containerd: v2.0 or later

  - CRI-O: v1.31 or later
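As a minimal sketch, assuming the kubelet is configured through a KubeletConfiguration file, the feature gate could be switched on for a node as shown below. The same gate also has to be enabled on the kube-apiserver (for example with --feature-gates=SupplementalGroupsPolicy=true), which this fragment does not cover.

```yaml
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
featureGates:
  SupplementalGroupsPolicy: true   # alpha in v1.31, so disabled unless set explicitly
```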

You can see if the feature is supported in the Node's .status.features.supplementalGroupsPolicy field.

    apiVersion: v1
    kind: Node
    ...
    status:
      features:
        supplementalGroupsPolicy: true

What's next?

Kubernetes SIG Node hope - and expect - that the feature will be promoted to beta and eventually general availability (GA) in future releases of Kubernetes, so that users no longer need to enable the feature gate manually.

Merge policy is applied when supplementalGroupsPolicy is not specified, for backwards compatibility.

How can I learn more?

- Configure a Security Context for a Pod or Container, for further details of supplementalGroupsPolicy

- KEP-3619: Fine-grained SupplementalGroups control

How to get involved?

This feature is driven by the SIG Node community. Please join us to connect with the community and share your ideas and feedback around the above feature and beyond. We look forward to hearing from you!

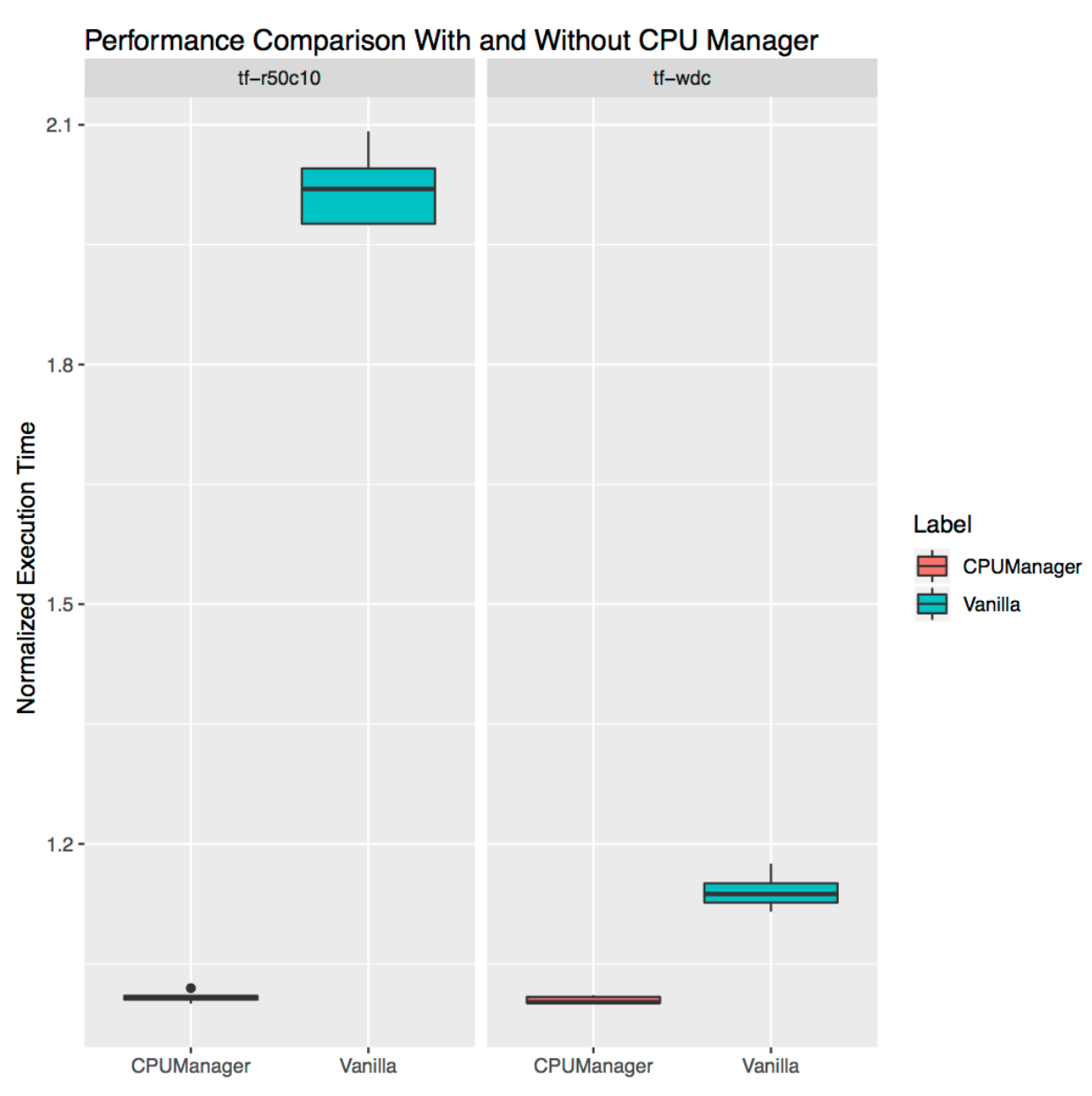

Kubernetes v1.31: New Kubernetes CPUManager Static Policy: Distribute CPUs Across Cores

In Kubernetes v1.31, we are excited to introduce a significant enhancement to CPU management capabilities: the distribute-cpus-across-cores option for the CPUManager static policy. This feature is currently in alpha and hidden by default, marking a strategic shift aimed at optimizing CPU utilization and improving system performance across multi-core processors.

Understanding the feature

Traditionally, Kubernetes' CPUManager tends to allocate CPUs as compactly as possible, typically packing them onto the fewest number of physical cores. However, the allocation strategy matters: CPUs on the same physical core still share some of that core's resources, such as the cache and execution units.

While the default approach minimizes inter-core communication and can be beneficial under certain scenarios, it also poses a challenge. CPUs sharing a physical core can lead to resource contention, which in turn may cause performance bottlenecks, particularly noticeable in CPU-intensive applications.

The new distribute-cpus-across-cores feature addresses this issue by modifying the allocation strategy. When enabled, this policy option instructs the CPUManager to spread out the CPUs (hardware threads) across as many physical cores as possible. This distribution is designed to minimize contention among CPUs sharing the same physical core, potentially enhancing the performance of applications by providing them dedicated core resources.

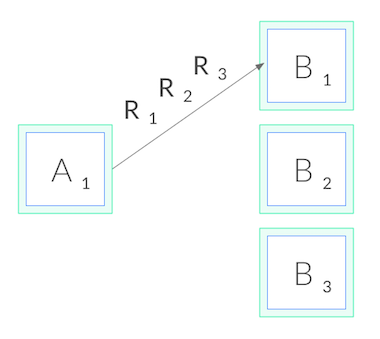

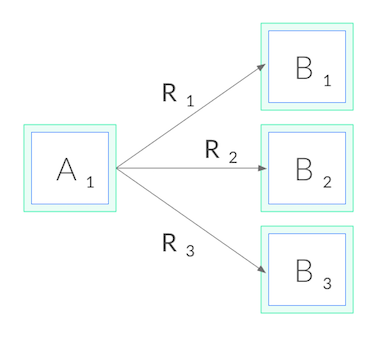

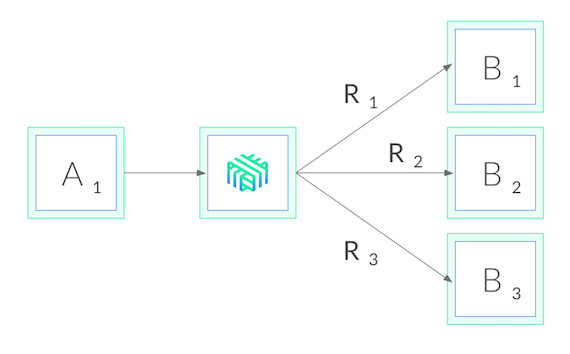

Technically, within this static policy, the free CPU list is reordered in the manner depicted in the diagram, aiming to allocate CPUs from separate physical cores.

Enabling the feature

To enable this feature, users first need to set the --cpu-manager-policy=static kubelet flag or the cpuManagerPolicy: static field in KubeletConfiguration. They can then add distribute-cpus-across-cores=true to the CPUManager policy options, either with the --cpu-manager-policy-options kubelet flag or in the kubelet configuration. This setting directs the CPUManager to adopt the new distribution strategy. It is important to note that this policy option cannot currently be used in conjunction with the full-pcpus-only or distribute-cpus-across-numa options.
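A minimal sketch of the equivalent kubelet configuration file follows. The CPUManagerPolicyAlphaOptions feature gate and the reserved CPU value are assumptions based on how alpha CPUManager policy options and the static policy generally behave; they are not stated in this post, so verify them against the CPU management documentation.

```yaml
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
featureGates:
  CPUManagerPolicyAlphaOptions: true     # assumed gate that unhides alpha policy options
cpuManagerPolicy: static
cpuManagerPolicyOptions:
  distribute-cpus-across-cores: "true"   # option values are strings
reservedSystemCPUs: "0"                  # assumed value; the static policy needs a CPU reservation
```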

Current limitations and future directions

As with any new feature, especially one in alpha, there are limitations and areas for future improvement. One significant current limitation is that distribute-cpus-across-cores cannot be combined with other policy options that might conflict in terms of CPU allocation strategies. This restriction can affect compatibility with certain workloads and deployment scenarios that rely on more specialized resource management.

Looking forward, we are committed to enhancing the compatibility and functionality of the distribute-cpus-across-cores option. Future updates will focus on resolving these compatibility issues, allowing this policy to be combined with other CPUManager policies seamlessly. Our goal is to provide a more flexible and robust CPU allocation framework that can adapt to a variety of workloads and performance demands.

Conclusion

The introduction of the distribute-cpus-across-cores policy in Kubernetes CPUManager is a step forward in our ongoing efforts to refine resource management and improve application performance. By reducing the contention on physical cores, this feature offers a more balanced approach to CPU resource allocation, particularly beneficial for environments running heterogeneous workloads. We encourage Kubernetes users to test this new feature and provide feedback, which will be invaluable in shaping its future development.


Further reading

Please check out the Control CPU Management Policies on the Node task page to learn more about the CPU Manager, and how it fits in relation to the other node-level resource managers.

Getting involved

This feature is driven by the SIG Node. If you are interested in helping develop this feature, sharing feedback, or participating in any other ongoing SIG Node projects, please attend the SIG Node meeting for more details.

Kubernetes 1.31: Autoconfiguration For Node Cgroup Driver (beta)

Historically, configuring the correct cgroup driver has been a pain point for users running new Kubernetes clusters. On Linux systems, there are two different cgroup drivers: cgroupfs and systemd. In the past, both the kubelet and CRI implementation (like CRI-O or containerd) needed to be configured to use the same cgroup driver, or else the kubelet would exit with an error. This was a source of headaches for many cluster admins. However, there is light at the end of the tunnel!

Automated cgroup driver detection

In v1.28.0, the SIG Node community introduced the feature gate KubeletCgroupDriverFromCRI, which instructs the kubelet to ask the CRI implementation which cgroup driver to use. A few minor releases of Kubernetes happened whilst we waited for support to land in the two major CRI implementations (containerd and CRI-O), but as of v1.31.0, this feature is now beta!

In addition to setting the feature gate, a cluster admin needs to ensure their CRI implementation is new enough:

- containerd: Support was added in v2.0.0

- CRI-O: Support was added in v1.28.0

Then, they should ensure their CRI implementation is configured to the cgroup_driver they would like to use.
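On the kubelet side, a minimal sketch of the corresponding configuration might look like this. Setting the gate explicitly is shown only for clarity, and the cgroupDriver value is an assumed fallback for runtimes that cannot report their driver.

```yaml
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
featureGates:
  KubeletCgroupDriverFromCRI: true   # ask the CRI implementation for its cgroup driver
cgroupDriver: systemd                # assumed fallback if the runtime lacks support
```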

Future work

Eventually, support for the kubelet's cgroupDriver configuration field will be dropped, and the kubelet will fail to start if the CRI implementation isn't new enough to have support for this feature.

Kubernetes 1.31: Streaming Transitions from SPDY to WebSockets

In Kubernetes 1.31, by default kubectl now uses the WebSocket protocol instead of SPDY for streaming.

This post describes what these changes mean for you and why these streaming APIs matter.

Streaming APIs in Kubernetes

In Kubernetes, specific endpoints that are exposed as an HTTP or RESTful interface are upgraded to streaming connections, which require a streaming protocol. Unlike HTTP, which is a request-response protocol, a streaming protocol provides a persistent connection that's bi-directional, low-latency, and lets you interact in real-time. Streaming protocols support reading and writing data between your client and the server, in both directions, over the same connection. This type of connection is useful, for example, when you create a shell in a running container from your local workstation and run commands in the container.

Why change the streaming protocol?

Before the v1.31 release, Kubernetes used the SPDY/3.1 protocol by default when upgrading streaming connections. SPDY/3.1 has been deprecated for eight years, and it was never standardized. Many modern proxies, gateways, and load balancers no longer support the protocol. As a result, you might notice that commands like kubectl cp, kubectl attach, kubectl exec, and kubectl port-forward stop working when you try to access your cluster through a proxy or gateway.

As of Kubernetes v1.31, SIG API Machinery has modified the streaming protocol that a Kubernetes client (such as kubectl) uses for these commands to the more modern WebSocket streaming protocol. The WebSocket protocol is a currently supported standardized streaming protocol that guarantees compatibility and interoperability with different components and programming languages. The WebSocket protocol is more widely supported by modern proxies and gateways than SPDY.

How streaming APIs work

Kubernetes upgrades HTTP connections to streaming connections by adding specific upgrade headers to the originating HTTP request. For example, an HTTP upgrade request for running the date command on an nginx container within a cluster is similar to the following:

    $ kubectl exec -v=8 nginx -- date
    GET https://127.0.0.1:43251/api/v1/namespaces/default/pods/nginx/exec?command=date…
    Request Headers:
        Connection: Upgrade
        Upgrade: websocket
        Sec-Websocket-Protocol: v5.channel.k8s.io
        User-Agent: kubectl/v1.31.0 (linux/amd64) kubernetes/6911225

If the container runtime supports the WebSocket streaming protocol and at least one of the subprotocol versions (e.g. v5.channel.k8s.io), the server responds with a successful 101 Switching Protocols status, along with the negotiated subprotocol version:

    Response Status: 101 Switching Protocols in 3 milliseconds
    Response Headers:
        Upgrade: websocket
        Connection: Upgrade
        Sec-Websocket-Accept: j0/jHW9RpaUoGsUAv97EcKw8jFM=
        Sec-Websocket-Protocol: v5.channel.k8s.io

At this point the TCP connection used for the HTTP protocol has changed to a streaming connection. Subsequent STDIN, STDOUT, and STDERR data (as well as terminal resizing data and process exit code data) for this shell interaction is then streamed over this upgraded connection.

How to use the new WebSocket streaming protocol

If your cluster and kubectl are on version 1.29 or later, there are two control plane feature gates and two kubectl environment variables that govern the use of WebSockets rather than SPDY. In Kubernetes 1.31, all of the following feature gates are in beta and are enabled by default:

- Feature gates

  - TranslateStreamCloseWebsocketRequests: .../exec and .../attach

  - PortForwardWebsockets: .../port-forward

- kubectl feature control environment variables

  - KUBECTL_REMOTE_COMMAND_WEBSOCKETS: kubectl exec, kubectl cp and kubectl attach

  - KUBECTL_PORT_FORWARD_WEBSOCKETS: kubectl port-forward

If you're connecting to an older cluster but can manage the feature gate settings, turn on both TranslateStreamCloseWebsocketRequests (added in Kubernetes v1.29) and PortForwardWebsockets (added in Kubernetes v1.30) to try this new behavior. Version 1.31 of kubectl can automatically use the new behavior, but you do need to connect to a cluster where the server-side features are explicitly enabled.
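How you set these gates depends on how the control plane is deployed. As one hedged example, assuming an older cluster managed with kubeadm and its v1beta3 configuration format, the gates could be passed to the kube-apiserver like this:

```yaml
# Sketch for a kubeadm-managed v1.30 cluster (assumption).
# On v1.29, drop PortForwardWebsockets, since that gate was only added in v1.30.
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
apiServer:
  extraArgs:
    feature-gates: "TranslateStreamCloseWebsocketRequests=true,PortForwardWebsockets=true"
```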

Learn more about streaming APIs

Kubernetes 1.31: Pod Failure Policy for Jobs Goes GA

This post describes Pod failure policy, which graduates to stable in Kubernetes 1.31, and how to use it in your Jobs.

About Pod failure policy

When you run workloads on Kubernetes, Pods might fail for a variety of reasons. Ideally, workloads like Jobs should be able to ignore transient, retriable failures and continue running to completion.

To allow for these transient failures, Kubernetes Jobs include the backoffLimit field, which lets you specify a number of Pod failures that you're willing to tolerate during Job execution. However, if you set a large value for the backoffLimit field and rely solely on this field, you might notice unnecessary increases in operating costs as Pods restart excessively until the backoffLimit is met.

This becomes particularly problematic when running large-scale Jobs with thousands of long-running Pods across thousands of nodes.

The Pod failure policy extends the backoff limit mechanism to help you reduce costs in the following ways:

- Gives you control to fail the Job as soon as a non-retriable Pod failure occurs.

- Allows you to ignore retriable errors without increasing the backoffLimit field.

For example, you can use a Pod failure policy to run your workload on more affordable spot machines by ignoring Pod failures caused by graceful node shutdown.

The policy allows you to distinguish between retriable and non-retriable Pod failures based on container exit codes or Pod conditions in a failed Pod.

How it works

You specify a Pod failure policy in the Job specification, represented as a list of rules.

For each rule you define match requirements based on one of the following properties:

- Container exit codes: the onExitCodes property.

- Pod conditions: the onPodConditions property.

Additionally, for each rule, you specify one of the following actions to take when a Pod matches the rule:

- Ignore: Do not count the failure towards the backoffLimit or backoffLimitPerIndex.

- FailJob: Fail the entire Job and terminate all running Pods.

- FailIndex: Fail the index corresponding to the failed Pod. This action works with the Backoff limit per index feature.

- Count: Count the failure towards the backoffLimit or backoffLimitPerIndex. This is the default behavior.
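To illustrate the FailIndex action, here is a minimal sketch of an Indexed Job that combines it with a per-index backoff limit. The Job name, image, command and all numeric values are illustrative assumptions.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: job-fail-index-example   # hypothetical name
spec:
  completions: 10
  parallelism: 3
  completionMode: Indexed        # FailIndex only makes sense for Indexed Jobs
  backoffLimitPerIndex: 1        # each index tolerates one counted failure
  maxFailedIndexes: 5            # assumed cap before the whole Job fails
  podFailurePolicy:
    rules:
    - action: FailIndex          # give up on this index immediately
      onExitCodes:
        operator: In
        values: [42]
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: main
        image: docker.io/library/bash:5   # assumed image
        command: ["bash", "-c", "echo working on index $JOB_COMPLETION_INDEX"]
```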

When Pod failures occur in a running Job, Kubernetes matches the failed Pod status against the list of Pod failure policy rules, in the specified order, and takes the corresponding actions for the first matched rule.

Note that when specifying the Pod failure policy, you must also set the Job's Pod template with restartPolicy: Never. This prevents race conditions between the kubelet and the Job controller when counting Pod failures.

Kubernetes-initiated Pod disruptions

To allow matching Pod failure policy rules against failures caused by

disruptions initiated by Kubernetes, this feature introduces the DisruptionTarget

Pod condition.

Kubernetes adds this condition to any Pod, regardless of whether it's managed by

a Job controller, that fails because of a retriable

disruption scenario.

The DisruptionTarget condition contains one of the following reasons that

corresponds to these disruption scenarios:

PreemptionByKubeScheduler: Preemption bykube-schedulerto accommodate a new Pod that has a higher priority.DeletionByTaintManager- the Pod is due to be deleted bykube-controller-managerdue to aNoExecutetaint that the Pod doesn't tolerate.EvictionByEvictionAPI- the Pod is due to be deleted by an API-initiated eviction.DeletionByPodGC- the Pod is bound to a node that no longer exists, and is due to be deleted by Pod garbage collection.TerminationByKubelet- the Pod was terminated by graceful node shutdown, node pressure eviction or preemption for system critical pods.

In all other disruption scenarios, like eviction due to exceeding Pod container limits, Pods don't receive the DisruptionTarget condition because the disruptions were likely caused by the Pod and would reoccur on retry.
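To make the condition concrete, here is a sketch of how it might appear in the status of a Pod that was preempted by the scheduler. The message text and timestamp are placeholders; only the condition type and the reason value come from the description above.

```yaml
status:
  phase: Failed
  conditions:
  - type: DisruptionTarget
    status: "True"
    reason: PreemptionByKubeScheduler
    message: "illustrative message"               # placeholder text
    lastTransitionTime: "2024-08-19T00:00:00Z"    # placeholder timestamp
```

A Pod failure policy rule with onPodConditions and type: DisruptionTarget matches a failed Pod that carries a condition like this one.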

Example

The Pod failure policy snippet below demonstrates an example use:

```yaml
podFailurePolicy:
  rules:
  - action: Ignore
    onPodConditions:
    - type: DisruptionTarget
  - action: FailJob
    onPodConditions:
    - type: ConfigIssue
  - action: FailJob
    onExitCodes:
      operator: In
      values: [ 42 ]
```

In this example, the Pod failure policy does the following:

- Ignores any failed Pods that have the built-in DisruptionTarget condition. These Pods don't count towards Job backoff limits.

- Fails the Job if any failed Pods have the custom user-supplied ConfigIssue condition, which was added either by a custom controller or webhook.

- Fails the Job if any containers exited with the exit code 42.

- Counts all other Pod failures towards the default backoffLimit (or backoffLimitPerIndex if used).

Learn more

- For a hands-on guide to using Pod failure policy, see Handling retriable and non-retriable pod failures with Pod failure policy

- Read the documentation for Pod failure policy and Backoff limit per index

- Read the documentation for Pod disruption conditions

- Read the KEP for Pod failure policy

Related work

Based on the concepts introduced by Pod failure policy, the following additional work is in progress:

- JobSet integration: Configurable Failure Policy API

- Pod failure policy extension to add more granular failure reasons

- Support for Pod failure policy via JobSet in Kubeflow Training v2

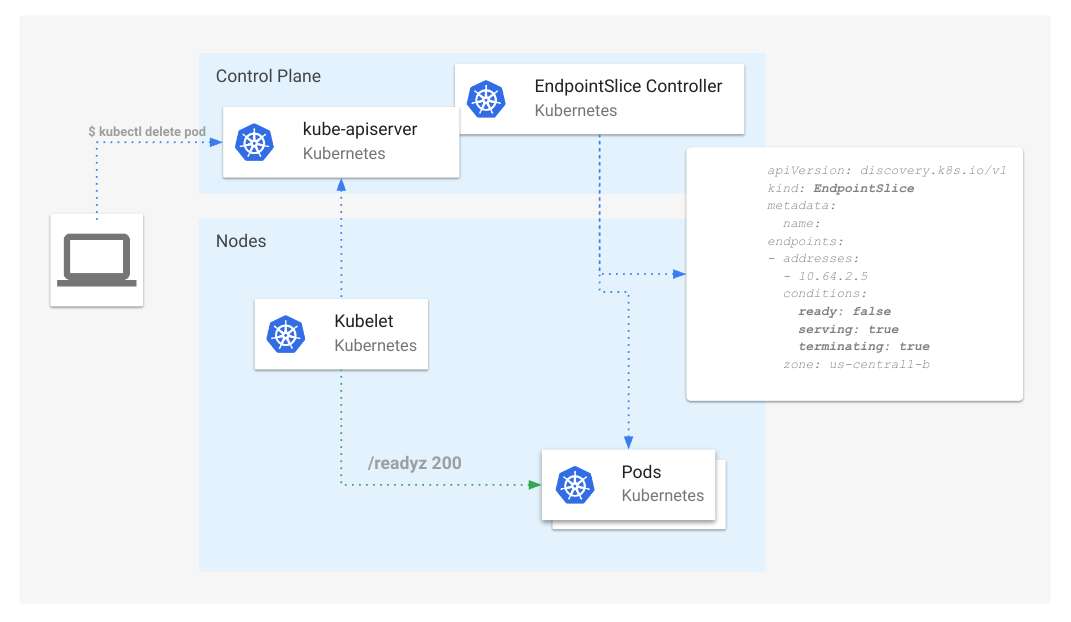

- Proposal: Disrupted Pods should be removed from endpoints

Get involved

This work was sponsored by the Batch Working Group in close collaboration with the SIG Apps, SIG Node, and SIG Scheduling communities.

If you are interested in working on new features in this space, we recommend subscribing to our Slack channel and attending the regular community meetings.

Acknowledgments

I would love to thank everyone who was involved in this project over the years - it's been a journey and a joint community effort! The list below is my best-effort attempt to remember and recognize people who made an impact. Thank you!

- Aldo Culquicondor for guidance and reviews throughout the process

- Jordan Liggitt for KEP and API reviews

- David Eads for API reviews

- Maciej Szulik for KEP reviews from SIG Apps PoV

- Clayton Coleman for guidance and SIG Node reviews

- Sergey Kanzhelev for KEP reviews from SIG Node PoV

- Dawn Chen for KEP reviews from SIG Node PoV

- Daniel Smith for reviews from SIG API machinery PoV

- Antoine Pelisse for reviews from SIG API machinery PoV

- John Belamaric for PRR reviews

- Filip Křepinský for thorough reviews from SIG Apps PoV and bug-fixing

- David Porter for thorough reviews from SIG Node PoV

- Jensen Lo for early requirements discussions, testing and reporting issues

- Daniel Vega-Myhre for advancing JobSet integration and reporting issues

- Abdullah Gharaibeh for early design discussions and guidance

- Antonio Ojea for test reviews

- Yuki Iwai for reviews and aligning implementation of the closely related Job features

- Kevin Hannon for reviews and aligning implementation of the closely related Job features

- Tim Bannister for docs reviews

- Shannon Kularathna for docs reviews

- Paola Cortés for docs reviews

Kubernetes 1.31: MatchLabelKeys in PodAffinity graduates to beta

Kubernetes 1.29 introduced new fields matchLabelKeys and mismatchLabelKeys in podAffinity and podAntiAffinity.

In Kubernetes 1.31, this feature moves to beta and the corresponding feature gate (MatchLabelKeysInPodAffinity) gets enabled by default.

matchLabelKeys - Enhanced scheduling for versatile rolling updates

During a workload's (e.g., Deployment) rolling update, a cluster may have Pods from multiple versions at the same time.

However, the scheduler cannot distinguish between old and new versions based on the labelSelector specified in podAffinity or podAntiAffinity. As a result, it will co-locate or disperse Pods regardless of their versions.

This can lead to sub-optimal scheduling outcomes, for example:

- New version Pods are co-located with old version Pods (podAffinity), which will eventually be removed after rolling updates.

- Old version Pods are distributed across all available topologies, preventing new version Pods from finding nodes due to podAntiAffinity.

matchLabelKeys is a set of Pod label keys that addresses this problem. The scheduler looks up the values of these keys from the new Pod's labels and combines them with labelSelector so that podAffinity matches only Pods that have the same key-value pairs in their labels.

By using the pod-template-hash label in matchLabelKeys, you can ensure that only Pods of the same version are evaluated for podAffinity or podAntiAffinity.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: application-server
...
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - database
        topologyKey: topology.kubernetes.io/zone
        matchLabelKeys:
        - pod-template-hash
```

The matchLabelKeys above is translated in the resulting Pods as follows:

```yaml
kind: Pod
metadata:
  name: application-server
  labels:
    pod-template-hash: xyz
...
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - database
          - key: pod-template-hash # Added from matchLabelKeys; Only Pods from the same replicaset will match this affinity.
            operator: In
            values:
            - xyz
        topologyKey: topology.kubernetes.io/zone
        matchLabelKeys:
        - pod-template-hash
```
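The same mechanism applies to the podAntiAffinity problem described earlier, where old version Pods block new version Pods during a rolling update. The fragment below is a sketch under assumed values: the app: application-server label and the kubernetes.io/hostname topology key are illustrative choices, not taken from the example above.

```yaml
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector:
        matchExpressions:
        - key: app
          operator: In
          values:
          - application-server   # hypothetical label value
      topologyKey: kubernetes.io/hostname
      matchLabelKeys:
      - pod-template-hash        # anti-affinity only applies among Pods of the same ReplicaSet
```

With this in place, a new version Pod is kept apart only from other new version Pods, so nodes that still run old version Pods remain eligible.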

mismatchLabelKeys - Service isolation

mismatchLabelKeys is a set of Pod label keys, like matchLabelKeys. The scheduler looks up the values of these keys from the new Pod's labels and merges them with labelSelector as key notin (value), so that podAffinity does not match Pods that have the same key-value pairs in their labels.

Suppose all Pods for each tenant get a tenant label via a controller or a manifest management tool like Helm. Although the value of the tenant label is unknown when composing each workload's manifest, the cluster admin wants to achieve exclusive 1:1 tenant-to-domain placement for tenant isolation.

mismatchLabelKeys works for this use case. By applying the following affinity globally using a mutating webhook, the cluster admin can ensure that Pods from the same tenant land on the same domain exclusively, meaning Pods from other tenants won't land on the same domain.

```yaml
affinity:
  podAffinity:      # ensures the pods of this tenant land on the same node pool
    requiredDuringSchedulingIgnoredDuringExecution:
    - matchLabelKeys:
        - tenant
      topologyKey: node-pool
  podAntiAffinity:  # ensures only Pods from this tenant lands on the same node pool
    requiredDuringSchedulingIgnoredDuringExecution:
    - mismatchLabelKeys:
        - tenant
      labelSelector:
        matchExpressions:
        - key: tenant
          operator: Exists
      topologyKey: node-pool
```

The matchLabelKeys and mismatchLabelKeys above are translated as follows:

```yaml
kind: Pod
metadata:
  name: application-server
  labels:
    tenant: service-a
spec:
  affinity:
    podAffinity:      # ensures the pods of this tenant land on the same node pool
      requiredDuringSchedulingIgnoredDuringExecution:
      - matchLabelKeys:
          - tenant
        topologyKey: node-pool
        labelSelector:
          matchExpressions:
          - key: tenant
            operator: In
            values:
            - service-a
    podAntiAffinity:  # ensures only Pods from this tenant lands on the same node pool
      requiredDuringSchedulingIgnoredDuringExecution:
      - mismatchLabelKeys:
          - tenant
        labelSelector:
          matchExpressions:
          - key: tenant
            operator: Exists
          - key: tenant
            operator: NotIn
            values:
            - service-a
        topologyKey: node-pool
```

Getting involved

These features are managed by Kubernetes SIG Scheduling.

Please join us and share your feedback. We look forward to hearing from you!

How can I learn more?

Kubernetes 1.31: Prevent PersistentVolume Leaks When Deleting out of Order

PersistentVolumes (or PVs for short) are associated with a reclaim policy. The reclaim policy determines the actions that the storage backend needs to take when the PVC bound to a PV is deleted. When the reclaim policy is Delete, the expectation is that the storage backend releases the storage resource allocated for the PV. In essence, the reclaim policy needs to be honored on PV deletion.

With the recent Kubernetes v1.31 release, a beta feature lets you configure your cluster to behave that way and honor the configured reclaim policy.
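For reference, the reclaim policy is recorded on the PV object in spec.persistentVolumeReclaimPolicy. The manifest below is a sketch of a dynamically provisioned PV. The object names match the walkthrough in the next section, while the CSI driver name and volume handle are placeholders.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pvc-6791fdd4-5fad-438e-a7fb-16410363e3da
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete     # the policy discussed in this article
  storageClassName: example-vanilla-block-sc
  claimRef:                                 # the PVC this PV is bound to
    namespace: default
    name: example-vanilla-block-pvc
  csi:
    driver: csi.example.com                 # hypothetical driver name
    volumeHandle: example-volume-handle     # hypothetical backend volume ID
```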

How did reclaim work in previous Kubernetes releases?

A PersistentVolumeClaim (or PVC for short) is a user's request for storage. A PV and PVC are considered Bound if a newly created PV or a matching PV is found. The PVs themselves are backed by volumes allocated by the storage backend.

Normally, if the volume is to be deleted, then the expectation is to delete the PVC for a bound PV-PVC pair. However, there are no restrictions on deleting a PV before deleting a PVC.

First, I'll demonstrate the behavior for clusters running an older version of Kubernetes.

Retrieve a PVC that is bound to a PV

Retrieve an existing PVC example-vanilla-block-pvc:

```shell
kubectl get pvc example-vanilla-block-pvc
```

The following output shows the PVC and its bound PV; the PV is shown under the VOLUME column:

```
NAME                        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS               AGE
example-vanilla-block-pvc   Bound    pvc-6791fdd4-5fad-438e-a7fb-16410363e3da   5Gi        RWO            example-vanilla-block-sc   19s
```

Delete PV

When I try to delete a bound PV, the kubectl session blocks and the kubectl tool does not return control to the shell; for example:

```shell
kubectl delete pv pvc-6791fdd4-5fad-438e-a7fb-16410363e3da
```

```
persistentvolume "pvc-6791fdd4-5fad-438e-a7fb-16410363e3da" deleted
^C
```

Retrieving the PV

```shell
kubectl get pv pvc-6791fdd4-5fad-438e-a7fb-16410363e3da
```

It can be observed that the PV is in a Terminating state:

```
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS        CLAIM                               STORAGECLASS               REASON   AGE
pvc-6791fdd4-5fad-438e-a7fb-16410363e3da   5Gi        RWO            Delete           Terminating   default/example-vanilla-block-pvc   example-vanilla-block-sc            2m23s
```

Delete PVC

```shell
kubectl delete pvc example-vanilla-block-pvc
```

The following output is seen if the PVC gets successfully deleted:

```
persistentvolumeclaim "example-vanilla-block-pvc" deleted
```

The PV object is also deleted from the cluster. An attempt to retrieve the PV shows that it is no longer found:

```shell
kubectl get pv pvc-6791fdd4-5fad-438e-a7fb-16410363e3da
```

```
Error from server (NotFound): persistentvolumes "pvc-6791fdd4-5fad-438e-a7fb-16410363e3da" not found
```

Although the PV is deleted, the underlying storage resource is not deleted and needs to be removed manually.

To sum up, the reclaim policy associated with the PersistentVolume is currently

ignored under certain circumstances. For a Bound PV-PVC pair, the ordering of PV-PVC

deletion determines whether the PV reclaim policy is honored. The reclaim policy

is honored if the PVC is deleted first; however, if the PV is deleted prior to